By Paula Livingstone on July 18, 2023, 2:30 p.m.

Tagged with: Big Data Training Ethics Innovation Privacy Security Technology AI Research Future Machine Learning Optimization Architecture Networking Performance

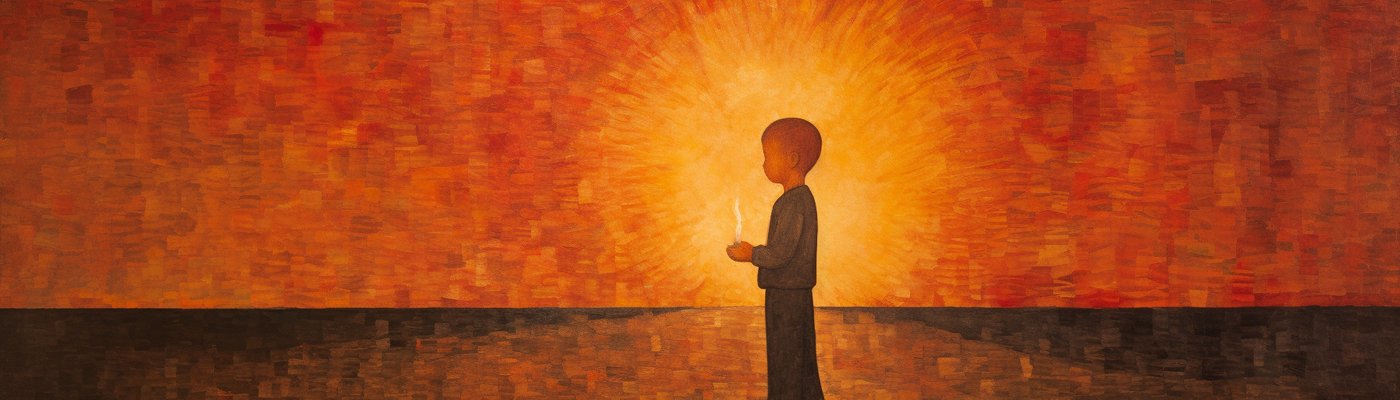

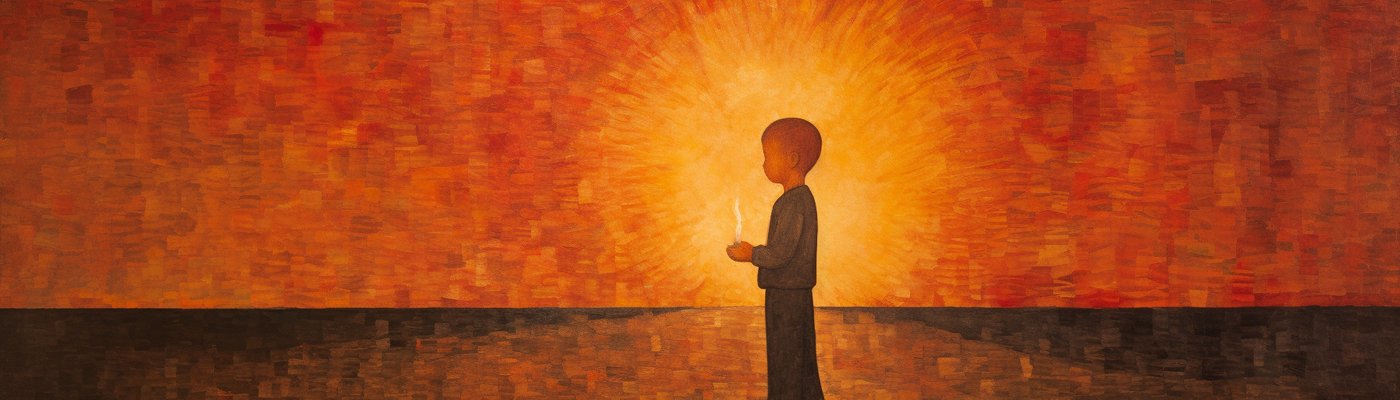

Welcome to "The Mind of the Machine: Unpacking Neural Network Language Models." This blog post aims to serve as a comprehensive guide to the intricate and fascinating world of neural networks, particularly focusing on their application in language modeling. The header image of a child holding a flame is a metaphorical representation of the trial-and-error learning process, a principle that lies at the core of neural networks.

Understanding neural networks is not just about knowing the technical jargon; it's about grasping the underlying principles that guide their operation. Each neuron, each layer, and each connection in a neural network serves a specific purpose. They come together to form a complex system capable of learning from data, making predictions, and even mimicking human-like cognition to some extent.

As we navigate through the various sections of this blog, we will break down the complexities into digestible parts. We'll start by exploring the biological inspirations behind neural networks, and then move on to how these networks model language. The aim is to provide you with a well-rounded understanding of the subject matter, whether you're a seasoned professional, a student, or just someone intrigued by the confluence of linguistics and technology.

Real-world applications of neural networks are vast and growing. From natural language processing in your smartphone's voice assistant to complex algorithms that analyze financial markets, the reach of neural networks is far and wide. This blog post will not only explain the theoretical aspects but will also delve into practical applications, supported by real-world case studies.

So, why should you invest your time in understanding neural networks and language models? The answer is simple: because they are shaping the future of technology. Whether it's automating mundane tasks or advancing scientific research, neural networks have a role to play. By the end of this blog post, you'll have the knowledge and insights to appreciate the transformative power of neural networks in today's digital age.

Now, without further ado, let's embark on this enlightening journey. Each section of this blog post has been carefully crafted to provide you with a thorough understanding of neural network language models, from their basic building blocks to their future potential. So, sit back, relax, and let's dive into the mind of the machine.

Overview of Neural Networks

Neural networks form the backbone of many modern technologies, yet their inner workings often remain shrouded in mystery. To demystify this, let's start by understanding that a neural network is essentially a computational model. It's designed to recognize patterns, interpret data, or, in the context of our discussion, model language.

At its core, a neural network consists of layers of interconnected nodes, commonly referred to as neurons. These neurons are organized into three kinds of layers: the input layer, one or more hidden layers, and the output layer. The input layer receives the initial data, the hidden layers process it, and the output layer provides the final result. For instance, in language modeling, the input could be a sequence of words, and the output might be the prediction of the next word in the sequence.

It's worth noting that neural networks are not a new concept. They've been around since the mid-20th century but gained significant traction with the advent of increased computational power and data availability. Today, they're used in a multitude of applications, from image recognition to natural language processing.

One of the most intriguing aspects of neural networks is their ability to learn from data. Unlike traditional algorithms that are explicitly programmed to perform a task, neural networks learn from examples. This is akin to how a child learns to speak not by memorizing grammar rules, but by listening to conversations and mimicking them.

However, not all neural networks are created equal. There are various architectures and types, each with its own set of advantages and disadvantages. As we proceed through this blog post, we'll delve deeper into these different types, focusing particularly on their application in language modeling.

So, to sum up this section, neural networks are versatile computational models capable of learning and adapting. They serve as the foundation for more specialized types like Recurrent Neural Networks, which we'll explore in detail later.

Biological Inspiration

The concept of neural networks didn't materialize out of thin air; it has its roots in biology. Specifically, these computational models are inspired by the neural structure of the human brain. Just as neurons in the brain are connected by synapses, the nodes in a neural network are linked by weighted connections.

While the human brain consists of approximately 86 billion neurons, even the most complex neural networks have far fewer nodes. Yet, the basic principle remains the same: information is processed through a series of interconnected units. In the brain, electrical signals travel from neuron to neuron, leading to thoughts, actions, and reactions. Similarly, in a neural network, data flows through nodes, undergoing transformations along the way.

It's crucial to clarify that the term 'neural network' in computing is an abstraction rather than a precise replication of biological neural systems. The mechanisms by which neurons in the brain process information are vastly more complex than their artificial counterparts. For instance, the brain processes information in a massively parallel and remarkably energy-efficient manner, something that artificial neural networks still struggle to match even on specialized hardware.

However, the biological inspiration serves as a useful metaphor for understanding how neural networks function. For example, consider the process of learning to ride a bicycle. Our brain receives input from our senses, processes it, and outputs actions like balancing and pedaling. In a similar fashion, a neural network can learn to recognize patterns or sequences in data and make predictions or decisions based on that.

As we delve further into the intricacies of neural networks, it's essential to keep this biological inspiration in mind. It not only provides a foundational understanding but also opens up avenues for future advancements. Researchers are continually looking to biology for insights into making neural networks more efficient and capable.

So, while neural networks may not fully emulate the complexity of biological systems, the inspiration drawn from nature is undeniable. This foundational concept serves as a stepping stone for the more specialized architectures and functionalities we'll explore in subsequent sections.

Language Modeling

Having established the biological underpinnings of neural networks, let's turn our attention to a specific application: language modeling. In essence, a language model is a model trained to predict the next word in a sequence based on the words that precede it; in this post we focus on language models built with neural networks. Language modeling is a cornerstone of natural language processing (NLP), with applications ranging from machine translation to chatbots.

Language models operate by understanding the statistical properties of a language. They analyze vast amounts of text data to learn the likelihood of a particular word following a given sequence of words. For example, in the sentence "The cat is on the ___," a well-trained language model might predict the next word to be 'roof' or 'ground' based on the frequency of these word combinations in its training data.
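To make the idea of "learning the likelihood of a word following other words" concrete, here is a minimal sketch of a purely count-based bigram model. It is not a neural network; it is the simple statistical baseline that neural models improve on. The corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, prev):
    """Turn the raw counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}  # the model has never seen this word
    return {word: c / total for word, c in counts[prev].items()}

# Toy corpus, invented for illustration only.
corpus = [
    "the cat is on the roof",
    "the cat is on the ground",
    "the dog is on the roof",
]
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
```

In this toy corpus, 'roof' follows 'the' more often than 'ground' does, so it receives the higher probability. A model like this only ever looks one word back, which is exactly the limitation discussed next.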

However, the task is not as straightforward as it may seem. Language is inherently complex, filled with nuances, idioms, and contextual meanings. A simple statistical approach may not capture these subtleties. This is where neural networks, particularly more advanced types like Recurrent Neural Networks (RNNs), come into play.

Neural network-based language models have the advantage of being able to capture long-range dependencies in a sentence. For instance, the meaning of a word can be influenced by a word that appeared much earlier in the sentence. Traditional n-gram models, which look only at a fixed window of preceding words, struggle with this; neural networks are better equipped to handle it.

It's worth mentioning that language models are not just limited to text. They can also be applied to other forms of sequential data, like time-series financial data or even genetic sequences. The principles remain the same: analyze the existing sequence to predict the next element.

As we move forward, we'll delve into the specific types of neural networks that are most effective for language modeling, such as RNNs and their variants. These specialized architectures offer the ability to capture the complexities of language, making them invaluable tools in the realm of NLP.

The Human Analogy

As we delve deeper into the subject of neural networks and language models, it's beneficial to draw parallels with human learning processes. Just as a child learns to speak by listening to conversations around them, neural networks learn from data. The header image of a child holding a flame symbolizes this trial-and-error learning process.

Consider how humans learn languages. We don't start by studying grammar rules; instead, we listen, mimic, and gradually understand the context and semantics. Similarly, neural networks learn by processing large datasets, making errors, and adjusting their internal parameters to improve.

However, human learning is not just about repetition and mimicry. It involves understanding, intuition, and the ability to generalize from specific instances to broader situations. Neural networks strive to achieve this level of sophistication through layers of computation and non-linear transformations.

It's also worth noting that humans use prior knowledge and context to make sense of new information. In a similar vein, some advanced neural networks use mechanisms like attention to weigh the importance of different pieces of input data.

As we explore more specialized types of neural networks, keep in mind this human analogy. It serves as a conceptual framework that can make complex algorithms more relatable and easier to understand.

Feed-forward Neural Networks

Having established the human analogy, let's now focus on one of the simplest types of neural networks: the feed-forward neural network. In these networks, data flows in one direction from the input layer through the hidden layers and finally to the output layer. There are no loops or recurrent connections, making them less complex but also less capable of handling certain types of data.
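The one-directional flow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the layer sizes and weights are hypothetical values chosen so the arithmetic is easy to follow, and a real network would learn its weights from data.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus bias for each neuron."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Data flows input -> hidden -> output, with no loops."""
    hidden = [sigmoid(z) for z in dense(inputs, hidden_w, hidden_b)]
    return dense(hidden, out_w, out_b)

# Hypothetical weights: 2 inputs, 2 hidden neurons, 1 output.
hidden_w = [[0.5, -0.5], [1.0, 1.0]]
hidden_b = [0.0, 0.0]
out_w = [[1.0, 1.0]]
out_b = [0.0]

output = forward([0.0, 0.0], hidden_w, hidden_b, out_w, out_b)
```

Notice that `forward` keeps no state between calls. Each input is processed in isolation, which is why this kind of network has no notion of what came before.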

Feed-forward neural networks are often the first stop for beginners in the field. They are relatively easy to understand and implement. For example, they are commonly used in image classification tasks where the input is a fixed-size vector, and the output is a label indicating the type of object in the image.

However, their simplicity is both an advantage and a limitation. While they are computationally less intensive, they lack the ability to process sequences or remember past data, which is crucial for tasks like language modeling.

It's like learning to play a musical instrument. A beginner might start with simple scales and chords (akin to feed-forward networks), but to compose a symphony, one needs a deeper understanding of music theory and structure (akin to more complex networks like RNNs).

As we proceed, we'll explore how more complex architectures like Recurrent Neural Networks overcome these limitations, offering greater flexibility and capability in handling various types of data.

Recurrent Neural Networks (RNNs)

As we venture further into the landscape of neural networks, we encounter Recurrent Neural Networks, or RNNs. Unlike feed-forward networks, RNNs have connections that loop back within the network. This unique architecture allows them to maintain a 'memory' of previous inputs, making them particularly well-suited for tasks involving sequences, such as language modeling.

The recurrent connections enable RNNs to process sequences of varying lengths, a feature that is invaluable in natural language processing. For instance, when translating a sentence from one language to another, the order and context of words are crucial. RNNs can capture this context by remembering past words and using that information to influence future predictions.

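However, RNNs are not without their challenges. They are notoriously difficult to train effectively due to issues like vanishing and exploding gradients. These problems arise because, during training, the error signal is passed back through the same recurrent weights once for every time step. Over a long sequence, this repeated multiplication shrinks the gradient toward zero (vanishing) or blows it up (exploding), leading to stalled learning or numerical instability.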
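A deliberately simplified sketch shows why this happens. Assume a one-unit linear RNN, so that sending a gradient back one time step just multiplies it by the recurrent weight. Real networks use weight matrices and activation functions, but the exponential behaviour is the same in spirit.

```python
def gradient_scale(recurrent_weight, steps):
    """Factor applied to a gradient after flowing back `steps` time steps
    in a one-unit linear RNN (an intentionally simplified assumption)."""
    return recurrent_weight ** steps

vanishing = gradient_scale(0.5, 50)   # weight below 1: the signal fades away
exploding = gradient_scale(1.5, 50)   # weight above 1: the signal blows up
```

With a weight of 0.5 the gradient is effectively zero after 50 steps, while a weight of 1.5 inflates it by many orders of magnitude.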

Despite these challenges, RNNs have found applications in a variety of fields beyond language modeling. They are used in time-series prediction, speech recognition, and even in generating music. Their ability to handle sequential data makes them versatile tools in the machine learning toolkit.

As we move forward, we'll delve into the specific architectures and training techniques that have been developed to mitigate some of the challenges associated with RNNs. These advancements have paved the way for more robust and efficient models.

So, while RNNs may seem complex and daunting, their potential applications are vast, making them a subject worthy of deep exploration. Their ability to process and remember sequences sets them apart from simpler architectures, providing a powerful mechanism for tackling a wide range of problems.

Other Variants

While simple RNNs have been a significant focus in the realm of neural networks for language modeling, they are by no means the only option. Several variants have been developed to address specific challenges or to improve performance in particular tasks. These include Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), both of which are themselves recurrent architectures.

LSTMs, for example, were designed to combat the vanishing gradient problem that plagues simple RNNs. They introduce a more complex cell structure that allows for better retention of long-term dependencies. This makes them highly effective in tasks like machine translation where understanding the context over long sequences is crucial.

GRUs, on the other hand, offer a simpler architecture compared to LSTMs but achieve similar performance in many tasks. They are often preferred for applications where computational resources are limited, as they require fewer parameters to train.
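The "fewer parameters" claim can be checked with some quick arithmetic. In the textbook formulations, an LSTM layer has four weight blocks (input, forget, and output gates plus the candidate), while a GRU has three (update and reset gates plus the candidate). The sketch below assumes one bias vector per block; some libraries count biases differently, so treat the exact numbers as illustrative.

```python
def recurrent_param_count(input_size, hidden_size, gate_blocks):
    """Parameters in a recurrent layer with `gate_blocks` weight blocks.
    Each block has input weights, recurrent weights, and one bias vector."""
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return gate_blocks * per_block

# Hypothetical sizes: 100-dimensional inputs, 128 hidden units.
lstm_params = recurrent_param_count(100, 128, gate_blocks=4)
gru_params = recurrent_param_count(100, 128, gate_blocks=3)
```

Under these assumptions a GRU layer needs three quarters of the parameters of an LSTM layer of the same size.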

There are also convolutional neural networks (CNNs), which are primarily used in image recognition but have found applications in NLP as well. Their ability to capture local features makes them useful in tasks like sentiment analysis.

As technology advances, we can expect the emergence of even more specialized neural network architectures. Each will have its own set of advantages and disadvantages, and the choice of which to use will depend on the specific requirements of the task at hand.

Understanding these variants is crucial for anyone serious about delving into neural networks for language modeling. Each offers unique capabilities and limitations, and a nuanced understanding of these can greatly aid in the effective application of neural networks in various domains.

Training and Testing

Having explored the different types of neural networks, it's time to discuss how these models actually learn from data. The process can be broadly divided into two phases: training and testing. During training, the model learns to make predictions or decisions based on input data, adjusting its internal parameters to minimize errors. During testing, the trained model is evaluated on new, unseen data to assess its performance.

The training phase is where the bulk of the computational work happens. The model processes the training data, makes predictions, and then adjusts its weights based on the difference between its predictions and the actual outcomes. This process is repeated multiple times, often in iterations called epochs, until the model's performance meets a predetermined criterion.
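Here is a minimal sketch of that loop, using the simplest possible "model": a single weight `w` fitted so that `w * x` approximates `y`. The data, learning rate, and number of epochs are invented for illustration; the point is the shape of the loop, including the evaluation on data the model never saw during training.

```python
def train(data, epochs, learning_rate):
    """Fit y = w * x by repeatedly nudging w to reduce the squared error."""
    w = 0.0
    for _ in range(epochs):          # one epoch = one pass over the training data
        for x, y in data:
            prediction = w * x
            error = prediction - y
            # Gradient of 0.5 * error**2 with respect to w is error * x.
            w -= learning_rate * error * x
    return w

def mean_squared_error(data, w):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

# Invented data following y = 2x; the split sizes are arbitrary.
train_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
test_data = [(4.0, 8.0), (5.0, 10.0)]

w = train(train_data, epochs=100, learning_rate=0.05)
test_error = mean_squared_error(test_data, w)
```

A low `test_error` here means the weight learned from the training examples also works on the held-out examples.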

It's essential to monitor the model during training to avoid overfitting, a common pitfall where the model learns the training data too well but performs poorly on new data. Techniques like cross-validation help detect this problem, while regularization helps reduce it.

Once the model is trained, it undergoes testing to evaluate its generalization ability. This is a critical step, as a model that performs well during training but poorly during testing is of little practical use.

Metrics like accuracy, precision, and recall are commonly used to evaluate the model's performance. These metrics provide a quantitative measure of how well the model is doing and are crucial for comparing different models or architectures.
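For a binary classification task, these three metrics can be computed directly from the counts of true positives, false positives, and false negatives. The labels below are made up for illustration.

```python
def classification_metrics(actual, predicted, positive=1):
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    correct = sum(1 for a, p in pairs if a == p)
    return {
        "accuracy": correct / len(pairs),                   # share of all predictions that are right
        "precision": tp / (tp + fp) if tp + fp else 0.0,    # of predicted positives, how many are real
        "recall": tp / (tp + fn) if tp + fn else 0.0,       # of real positives, how many were found
    }

# Invented labels: 1 is the positive class.
actual = [1, 1, 1, 0, 0, 0, 0, 0]
predicted = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(actual, predicted)
```

In this example the accuracy is higher than the precision and recall, which is a reminder that a single metric rarely tells the whole story.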

Training and testing are iterative processes. Based on the testing results, the model may go back for further training, parameter tuning, or even architectural changes. This iterative approach ensures that the model is continually refined and optimized for its intended application.

Backpropagation

Backpropagation, or "backward propagation of errors," is the cornerstone algorithm for training neural networks. It's the mechanism by which the network learns from its mistakes, adjusting its internal parameters to minimize the error between its predictions and the actual outcomes.

The process begins by feeding the neural network an input and letting it make a prediction. The error between the predicted and actual output is then calculated, usually using a loss function like Mean Squared Error for regression tasks or Cross-Entropy Loss for classification tasks.
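Both loss functions are short enough to write out by hand. The sketch below uses hypothetical function names and made-up predictions; the cross-entropy version assumes the model outputs a probability for each class and that we know the index of the correct one.

```python
import math

def mse_loss(targets, predictions):
    """Mean Squared Error: average squared gap between target and prediction."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def cross_entropy(true_index, probabilities):
    """Negative log of the probability assigned to the correct class."""
    return -math.log(probabilities[true_index])

mse = mse_loss([1.0, 2.0], [1.5, 2.5])
ce_confident = cross_entropy(0, [0.9, 0.05, 0.05])   # right answer, high confidence
ce_unsure = cross_entropy(0, [0.4, 0.3, 0.3])        # right answer, low confidence
```

Cross-entropy rewards confidence in the correct answer: the more probability the model places on the right class, the smaller the loss.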

Once the error is calculated, the next step is to propagate this error backward through the network. This involves computing the gradient of the loss function with respect to each weight by applying the chain rule, a fundamental principle in calculus.

The gradients represent how much each weight contributed to the error. The weights are then adjusted in the opposite direction of the gradient to minimize the error. This is typically done using optimization algorithms like Gradient Descent or its variants.
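To see the chain rule at work, consider the smallest possible network: one neuron with a sigmoid activation and a squared-error loss. The values below are arbitrary, and the finite-difference check is only there to confirm that the hand-derived gradient is right.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, target):
    return 0.5 * (sigmoid(w * x + b) - target) ** 2

def gradients(w, b, x, target):
    """Chain rule: dL/dw = dL/dy * dy/dz * dz/dw, where z = w*x + b."""
    y = sigmoid(w * x + b)
    dL_dy = y - target
    dy_dz = y * (1.0 - y)
    return dL_dy * dy_dz * x, dL_dy * dy_dz  # (dL/dw, dL/db)

w, b, x, target = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = gradients(w, b, x, target)

# Sanity check against a finite-difference estimate of dL/dw.
eps = 1e-6
numeric_w = (loss(w + eps, b, x, target) - loss(w - eps, b, x, target)) / (2 * eps)

# One gradient descent step moves each parameter against its gradient.
learning_rate = 0.1
new_w = w - learning_rate * grad_w
new_b = b - learning_rate * grad_b
```

After the update, `loss(new_w, new_b, x, target)` is lower than the original loss. Backpropagation in a deep network repeats this same calculation layer by layer, from the output back to the input.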

Backpropagation is computationally intensive but essential for training complex neural networks. It's the reason why neural networks can learn from data, adapt to new situations, and improve their performance over time.

Understanding backpropagation is crucial for anyone diving into neural networks, as it provides insights into how these models learn and adapt, thereby influencing their performance in tasks like language modeling.

Activation Functions

Activation functions play a pivotal role in neural networks, introducing non-linearity into the system. Without this non-linearity, a stack of layers would collapse into a single linear transformation, no matter how many layers it had. With it, the network can model complex relationships, which is essential for tasks like language modeling where the relationships between variables are not always straightforward.

Common activation functions include the Sigmoid, Tanh, and ReLU (Rectified Linear Unit). Each has its own set of advantages and disadvantages. For example, Sigmoid is often used in the output layer of binary classification problems but suffers from the vanishing gradient problem in deep networks.

Tanh, like Sigmoid, also suffers from vanishing gradients but is zero-centered, which tends to make gradient-based training better behaved. ReLU, on the other hand, is computationally efficient and is far less prone to vanishing gradients because its gradient is exactly 1 for positive inputs. However, its output is unbounded, and it can cause 'dead' neurons that output zero for every input and therefore stop learning.
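These trade-offs are easier to see by looking at the gradients themselves. The following sketch prints the gradient of each function at a few sample inputs; the inputs are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)          # never larger than 0.25

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # reaches 1.0 at z = 0, then shrinks

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # a unit stuck at z <= 0 receives no gradient

for z in (-5.0, 0.0, 5.0):
    print(z, sigmoid_grad(z), tanh_grad(z), relu_grad(z))
```

For large positive or negative inputs, the Sigmoid and Tanh gradients shrink toward zero, which is the root of the vanishing gradient problem. The ReLU gradient stays at 1 for any positive input but is exactly 0 for negative ones.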

Choosing the right activation function can significantly impact the performance of the neural network. It's not a one-size-fits-all situation; the choice often depends on the specific task, the architecture of the network, and the nature of the input data.

As we explore more complex architectures, you'll see how different activation functions are better suited for different layers or types of networks. Understanding these nuances is key to effectively training and optimizing neural network models for language modeling or any other task.

So, while activation functions may seem like a minor detail, they are fundamental to the functioning and performance of neural networks. They determine how the network responds to inputs, how easily it can be trained, and how well it generalizes to new data.

Architecture of RNNs

Having discussed the basics of neural networks, backpropagation, and activation functions, it's time to delve into the architecture of Recurrent Neural Networks (RNNs) in more detail. As mentioned earlier, RNNs are particularly well-suited for sequence-based tasks like language modeling due to their ability to maintain a 'memory' of past inputs.

The architecture of an RNN includes an input layer, one or more hidden layers, and an output layer. What sets it apart from feed-forward networks is the presence of recurrent connections in the hidden layers. These connections allow information to loop back into the network, providing a form of memory.
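The recurrent connection is easiest to understand in code. Below is a minimal sketch of a one-unit RNN with hypothetical, hand-picked weights; a real RNN uses vectors and weight matrices and learns those weights during training.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One time step: the new state mixes the current input with the old state."""
    return math.tanh(w_xh * x + w_hh * h_prev + b_h)

def run_rnn(sequence, w_xh=1.0, w_hh=0.5, b_h=0.0):
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:  # works for a sequence of any length
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states

states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
```

The two sequences end with identical inputs, yet the final hidden state in `states_a` is still non-zero because it carries a trace of the first input. That trace is the network's 'memory'.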

However, basic RNN architectures suffer from limitations like the vanishing and exploding gradient problems, making them less effective for long sequences. This led to the development of more advanced architectures like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which we discussed in the section on 'Other Variants.'

These advanced architectures introduce additional gates and parameters to control the flow of information through the network. For example, LSTMs have a cell state that runs parallel to the hidden state, allowing them to maintain information over longer sequences.
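As a rough illustration, here is one step of a one-unit LSTM following the standard textbook equations. The parameter dictionary and its values are hypothetical, chosen to show an extreme case: the forget gate is almost fully open and the input gate almost fully closed, so the cell state is carried forward nearly unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a one-unit LSTM. `p` maps gate name -> (w_x, w_h, bias)."""
    def gate(name, squash):
        w_x, w_h, b = p[name]
        return squash(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much of the candidate to write
    o = gate("output", sigmoid)        # how much of the cell state to expose
    g = gate("candidate", math.tanh)   # proposed new content

    c = f * c_prev + i * g             # cell state: the long-term memory
    h = o * math.tanh(c)               # hidden state: what the next layer sees
    return h, c

# Hypothetical parameters: remember almost everything, write almost nothing.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 1.0
for _ in range(20):
    h, c = lstm_step(1.0, h, c, params)
```

After 20 steps the cell state `c` is still very close to its starting value of 1.0. Because the cell state is updated by gated addition rather than repeated squashing, information can survive across many time steps.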

Understanding the architecture of RNNs and their variants is crucial for effective implementation and optimization. It influences everything from the choice of activation functions to the training algorithms used.

As we proceed, we'll explore how to train these complex architectures effectively, ensuring that they not only learn from the data but also generalize well to new, unseen data.

Training RNNs

Training Recurrent Neural Networks (RNNs) is a nuanced and challenging task. While the fundamental principles of training, such as backpropagation and gradient descent, remain the same, the recurrent nature of these networks introduces additional complexities.

One of the primary challenges is the pair of vanishing and exploding gradient problems. These issues can make RNNs difficult to train effectively, particularly for long sequences. Various techniques have been developed to mitigate them. Gradient clipping, for example, addresses exploding gradients by limiting the size of the gradients to prevent them from becoming too large.
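Gradient clipping is simple to implement. The sketch below shows one common variant, clipping by the overall L2 norm; the threshold of 5.0 is an arbitrary value for illustration.

```python
import math

def clip_by_norm(gradients, max_norm):
    """Rescale the gradient vector if its L2 norm exceeds `max_norm`."""
    norm = math.sqrt(sum(g * g for g in gradients))
    if norm <= max_norm:
        return list(gradients)
    scale = max_norm / norm
    return [g * scale for g in gradients]

clipped = clip_by_norm([30.0, 40.0], max_norm=5.0)    # norm 50, scaled down to 5
unchanged = clip_by_norm([0.3, 0.4], max_norm=5.0)    # norm 0.5, left alone
```

Clipping preserves the direction of the gradient and only shortens it, so the update still points the right way but cannot take a destabilizing leap.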

Another approach is to use more advanced architectures like Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs). These architectures are designed to better capture long-term dependencies and are less susceptible to the vanishing gradient problem.

Regularization techniques like dropout can also be applied to prevent overfitting. In dropout, random neurons are "dropped out" during training, forcing the network to learn more robust features.
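A minimal sketch of dropout follows. It uses the 'inverted' variant, which rescales the surviving activations during training so that nothing needs to change at test time. The drop probability and the seed are arbitrary choices for illustration.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero random units and rescale the survivors."""
    if not training or drop_prob == 0.0:
        return list(activations)  # at test time every unit is kept
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
hidden = [1.0] * 10
dropped = dropout(hidden, drop_prob=0.5, rng=rng)
at_test_time = dropout(hidden, drop_prob=0.5, rng=rng, training=False)
```

During training each value in `dropped` is either 0.0 or 2.0, so the expected activation stays the same. At test time the activations pass through untouched.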

Hyperparameter tuning is another crucial aspect of training RNNs. Parameters like learning rate, batch size, and the number of hidden units can significantly impact the network's performance. It often requires multiple rounds of experimentation to find the optimal settings.
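One straightforward way to run those rounds of experimentation is a grid search: try every combination from a small set of candidate values and keep the one that does best on validation data. The sketch below reuses a toy single-weight model so that it stays self-contained; the candidate values and data are invented.

```python
from itertools import product

def train(data, epochs, learning_rate):
    """Fit y = w * x with plain gradient descent."""
    w = 0.0
    for _ in range(epochs):
        for x, y in data:
            w -= learning_rate * (w * x - y) * x
    return w

def validation_error(data, w):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

train_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
val_data = [(4.0, 8.0)]

results = []
for lr, epochs in product([0.001, 0.01, 0.05], [1, 10]):
    w = train(train_data, epochs, lr)
    results.append((validation_error(val_data, w), lr, epochs))

# The smallest validation error wins.
best_error, best_lr, best_epochs = min(results)
```

Grid search is easy to reason about but grows expensive quickly, since every extra hyperparameter multiplies the number of training runs.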

Training RNNs is both an art and a science. It requires a deep understanding of the architecture, the data, and the task at hand. With the right techniques and parameters, RNNs can be trained to perform exceptionally well on a wide range of sequence-based tasks.

Applications of RNNs

Recurrent Neural Networks (RNNs) are not just theoretical constructs; they have practical applications that touch various aspects of our daily lives. From natural language processing to financial forecasting, the utility of RNNs is far-reaching.

In the realm of language modeling, RNNs are used in machine translation, sentiment analysis, and chatbot development. Their ability to understand the context and sequence of words makes them ideal for these tasks.

Outside of language modeling, RNNs are used in time-series prediction, such as stock market forecasting. Their ability to remember past events allows them to make more accurate predictions about future trends.

RNNs also find applications in the healthcare sector for tasks like patient monitoring and disease prediction. Their ability to process sequential data makes them well-suited for analyzing time-series medical data like ECG readings.

In the entertainment industry, RNNs are used in the generation of music and art. They can learn the patterns and styles from existing works and create new compositions that are both novel and coherent.

The applications of RNNs are continually expanding as researchers find new ways to utilize their unique capabilities. As technology advances, we can expect to see RNNs integrated into even more aspects of our lives, making them an essential tool in the modern data-driven world.

Attention Mechanisms

As we delve deeper into the complexities of neural networks, particularly in the context of language modeling, we encounter the concept of attention mechanisms. These are advanced techniques that allow the network to focus on specific parts of the input when making predictions or decisions.

Attention mechanisms were inspired by the human visual attention system. Just as we don't process every detail in our visual field with equal focus, attention mechanisms allow neural networks to weigh different parts of the input differently.

In language modeling, attention mechanisms can significantly improve the performance of RNNs. They allow the network to focus on relevant words or phrases when making predictions, thereby capturing the context more effectively.
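At its simplest, attention is a weighted average in which the weights come from a similarity score. The sketch below shows basic (unscaled) dot-product attention with made-up vectors; real models learn the query, key, and value vectors and often add a scaling factor.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Dot-product attention: weight each value by query-key similarity."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Made-up vectors: three input positions, each with a key and a value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0], [20.0], [30.0]]
weights, context = attend([2.0, 0.0], keys, values)
```

The query matches the first and third keys far better than the second, so those two positions receive most of the weight. The weights always sum to 1, which makes `context` a weighted blend of the values.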

For example, in machine translation, an attention mechanism can help the model focus on the subject of a sentence when translating it, ensuring that the translation remains coherent and contextually accurate.

Attention mechanisms have also found applications outside of language modeling, such as in image recognition and time-series prediction. They provide a way to handle the increasing complexity of data and tasks, making them a crucial component in the development of more advanced neural network architectures.

Understanding attention mechanisms is essential for anyone serious about mastering neural networks for language modeling. They offer

Transfer Learning

As we navigate the intricate world of neural networks, another pivotal concept comes into play: Transfer Learning. This technique involves taking a pre-trained model and fine-tuning it for a different but related task. It's akin to applying knowledge gained in one domain to solve problems in another.

Transfer learning is particularly useful in scenarios where data is scarce or expensive to collect. For example, a language model trained on a large corpus of text can be fine-tuned to perform sentiment analysis on product reviews, thereby saving both time and computational resources.

The underlying principle is that the features learned by the model in its original task are general enough to be useful in other tasks. This is especially true for the lower layers of the network, which often capture basic features like edges in images or common phrases in text.
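The following is a heavily simplified sketch of one common form of transfer learning: keep the lower layers frozen and train only a new output layer on top of them. Here `pretrained_features` is a hypothetical stand-in for layers that were trained on some earlier task; in practice those would come from a real pre-trained model.

```python
def pretrained_features(x):
    """Stand-in for frozen lower layers; these are never updated."""
    return [x, x * x]

def fine_tune_head(data, epochs=2000, learning_rate=0.02):
    """Train only the new output weights on top of the frozen features."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        for x, y in data:
            feats = pretrained_features(x)
            error = sum(h * f for h, f in zip(head, feats)) - y
            head = [h - learning_rate * error * f for h, f in zip(head, feats)]
    return head

# Invented target task: y = 3 * x**2, learned from only four examples.
small_dataset = [(-1.0, 3.0), (0.5, 0.75), (1.0, 3.0), (2.0, 12.0)]
head = fine_tune_head(small_dataset)
```

Because the frozen features already contain something useful for the new task (the `x * x` term), a handful of examples is enough to learn the new output weights. If the features were unrelated to the target task, this shortcut would not help.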

However, transfer learning is not a silver bullet. It requires careful consideration of the similarities between the source and target tasks. If the tasks are too dissimilar, transferring knowledge may not yield significant benefits and could even impair performance.

Despite these challenges, transfer learning has proven to be a powerful tool in the machine learning arsenal. It has been successfully applied in various fields, from natural language processing to computer vision, and continues to be an area of active research.

Understanding the intricacies of transfer learning can provide a significant advantage when working with neural networks, especially in resource-constrained environments or specialized applications.

Case Study 1

Now that we've covered the theoretical aspects of neural networks and language models, let's delve into a practical example. In this case study, we'll explore the application of RNNs in machine translation.

Machine translation is a complex task that involves understanding the syntax, semantics, and context of both the source and target languages. Traditional methods like rule-based systems were limited in their capabilities, often producing translations that were technically correct but lacked nuance.

RNNs, particularly those with attention mechanisms, have revolutionized this field. They can capture the context and produce translations that are not only accurate but also contextually appropriate.

For instance, a sentence like "She is a star" could be translated into French as "Elle est une étoile" or "Elle est une vedette," depending on the context. An RNN with attention mechanisms can make this distinction based on the surrounding sentences, providing a more accurate translation.

This case study serves as a testament to the capabilities of modern neural networks in handling complex, real-world tasks. It illustrates the transformative impact these technologies can have, not just in academia but in practical, everyday applications.

By examining real-world applications through case studies, we gain a deeper understanding of the theoretical concepts discussed earlier. It bridges the gap between theory and practice, providing valuable insights into the capabilities and limitations of neural networks.

Case Study 2

Continuing with our exploration of practical applications, let's consider another case study, this time focusing on the use of neural networks in healthcare, specifically in predicting patient outcomes based on medical records.

Healthcare is a field ripe for the application of machine learning techniques, including neural networks. The ability to accurately predict patient outcomes based on historical data can significantly improve healthcare delivery and patient care.

RNNs are particularly well-suited for this task due to their ability to process sequential data. Medical records often consist of time-series data, such as lab test results, that are crucial for diagnosis and treatment planning.

For example, an RNN can be trained to predict the likelihood of a patient developing a particular condition based on their medical history. This could enable early intervention and potentially save lives.

This case study highlights the versatility of neural networks and their potential to make a meaningful impact in various fields. It serves as a compelling example of how these technologies can be applied to solve real-world problems, improving both efficiency and outcomes.

Through these case studies, we can appreciate the practical implications of the theoretical concepts we've discussed. They offer a tangible demonstration of the power and potential of neural networks in diverse applications.

Future of Neural Network Language Models

As we reach the conclusion of our exploration into neural networks and language models, it's worth pondering what the future holds. The field is rapidly evolving, with new architectures, training techniques, and applications emerging regularly.

One of the most exciting prospects is the integration of neural networks into everyday technologies. We're already seeing this with voice-activated assistants and recommendation systems, but the potential applications are vast. From personalized education platforms to advanced healthcare diagnostics, the possibilities are endless.

Another area of interest is the development of more efficient training algorithms. As neural networks grow in complexity, the computational resources required for training also increase. Researchers are actively working on methods to reduce this computational burden without sacrificing performance.

There's also a growing focus on interpretability and ethical considerations. As neural networks become more integrated into decision-making processes, understanding how they arrive at conclusions becomes crucial. This is especially true in sensitive areas like healthcare and criminal justice, where the stakes are high.

Moreover, two developments open up new avenues for research and application: transfer learning, in which a model trained on one task is reused as the starting point for another, and multi-modal models, which can process different types of data such as text, images, and sound. These approaches could revolutionize fields like autonomous driving and real-time translation services.

As we look to the future, it's clear that neural networks and language models will continue to play a significant role in technological advancements. Their potential to transform various aspects of our lives makes them not just an academic curiosity but a critical component of the next wave of digital innovation.

Final Thoughts

As we come to the end of our comprehensive journey through neural networks and language models, it's evident that we are standing on the cusp of a technological revolution. The advancements in this field are not just incremental; they are transformative, reshaping how we interact with machines and even with each other.

The power of neural networks lies in their adaptability and learning capabilities. They can be applied to a myriad of tasks, from simple pattern recognition to complex decision-making processes. Their versatility makes them invaluable tools in our increasingly data-driven world.

However, it's essential to approach this technology with a balanced perspective. While neural networks offer immense potential benefits, they also pose ethical and societal challenges that need to be addressed. Issues like data privacy, algorithmic bias, and the potential for misuse cannot be ignored.

As we continue to push the boundaries of what neural networks can do, it's crucial to involve a diverse set of perspectives in the conversation. This includes not just computer scientists and engineers but also ethicists, policymakers, and the general public.

By doing so, we can ensure that the development and deployment of these technologies are guided by a set of shared values, maximizing their benefits while minimizing potential risks.

Wrap-Up

In this extensive exploration, we've covered everything from the basic principles of neural networks to their advanced applications in language modeling and beyond. We've delved into the intricacies of training, the challenges of implementation, and the ethical considerations that come with this powerful technology.

While the landscape of neural networks is ever-changing, the fundamental principles remain the same. Understanding these principles is key to navigating the complexities of this field, whether you're a seasoned expert or a curious newcomer.

As we look forward to the future, one thing is clear: neural networks and language models are here to stay. They will continue to evolve, grow in complexity, and find new applications, enriching our lives in ways we can't yet fully comprehend.

Thank you for joining me on this enlightening journey. I hope this blog post has provided you with a robust understanding of neural networks and language models, equipping you with the knowledge to explore this fascinating field further.
