By Paula Livingstone on Sept. 2, 2024, 12:23 p.m.

Tagged with: Threat Modeling ICS Cryptography Decentralization Innovation Integration Cybersecurity Privacy Security Society Technology AI Research Future IOT Supply Chain Risk Management Standards Threat Detection Industry 4.0 Critical Infrastructure Vulnerabilities Attack Surface Cyber-Physical Systems SCADA Standards Bodies Industrial Control Systems (Ics)

AI security is no longer just about data protection and software vulnerabilities; it is about industrial resilience, operational integrity, and regulatory survival.

As artificial intelligence embeds itself deeper into critical systems, from power grids to global banking infrastructure, the risks extend far beyond stolen data or compromised privacy. A failure in an AI-driven decision system could lead to cascading financial crises, industrial sabotage, or even national security breaches. The stakes have shifted.

Yet many organisations continue to approach AI security as if it were just another IT concern, failing to see the broader implications for operational technology and real-world stability. That is, if they have considered it at all. This post examines the industrial security paradigm for AI, mapping the regulatory landscape, emerging threat models, and the engineering principles required to make AI not just defensible, but resilient in the face of attack.

AI is No Longer Just an IT Risk, It is an Industrial Challenge

The days of treating AI security as just another IT concern are over. Artificial intelligence has moved far beyond corporate data centres and SaaS platforms; it is now embedded within power grids, financial trading systems, healthcare diagnostics, and industrial control networks. A failure in an AI model no longer just exposes sensitive data; it can shut down factories, manipulate stock markets, or compromise national infrastructure. The stakes have never been higher.

Traditional cybersecurity defences were not designed for AI. Unlike conventional software, machine learning models learn from data, adapt dynamically, and are vulnerable to entirely new classes of attacks. Adversaries are already exploiting these weaknesses, using data poisoning, adversarial inputs, and model inversion to manipulate AI decision-making at the industrial level. The consequences are no longer theoretical; real-world breaches are already happening.

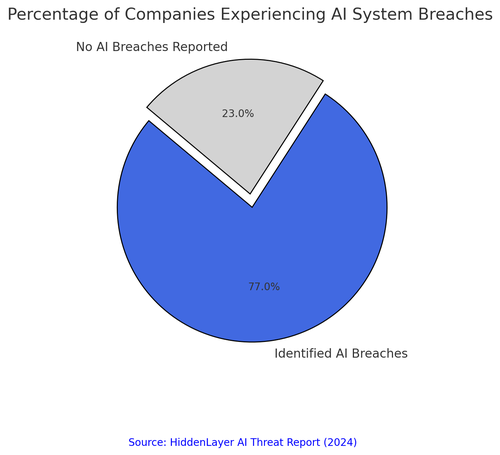

Recent findings indicate that 77% of companies have experienced AI-related security breaches in just the past year.

This statistic, captured in the accompanying visual, highlights how widespread the problem has become. Attackers are targeting AI models at every stage, compromising training data, inserting backdoors, and exploiting weaknesses in AI-powered automation. The reality is stark: most organisations are deploying AI without securing it.

As AI continues to integrate into critical sectors, security strategies must evolve. Industrial resilience, operational integrity, and regulatory survival now depend on treating AI security as a core component of infrastructure protection, not just another IT issue. The question is no longer whether AI will be targeted, but how prepared we are when it is.

The Convergence of AI and Industrial Cybersecurity

AI is no longer a passive tool confined to data analytics; it is now actively shaping industrial processes, automating decision-making, and managing critical infrastructure. This transformation demands a shift in how we approach security. The traditional separation between IT cybersecurity and operational technology (OT) security is no longer sustainable. AI is the bridge that connects these worlds, and with it comes an entirely new attack surface.

Organisations have historically relied on segmented security frameworks to protect industrial environments. Firewalls, network segmentation, and air-gapped systems were once enough to keep threats at bay. But the integration of AI into SCADA systems, smart grids, predictive maintenance, and financial risk modelling has created new vulnerabilities that attackers are learning to exploit. Adversarial manipulation of AI models can lead to cascading failures in systems once thought to be isolated from digital threats.

The urgency of this convergence is being recognised at the highest levels of security governance. IEC 62443, NIS2, DORA, and the EU AI Act are rapidly reshaping how AI security is regulated, shifting the focus from reactive IT security to proactive industrial resilience. The need to harden AI against adversarial attacks is no longer theoretical; it is a regulatory imperative. AI security cannot be bolted on as an afterthought; it must be embedded into industrial cybersecurity strategies from the ground up.

As AI becomes the backbone of industrial and financial systems, security leaders must rethink their approach. The future will belong to those who understand that AI security is not just about protecting models; it is about safeguarding the integrity of entire industries. The convergence of AI and industrial cybersecurity is not optional; it is inevitable, and failure to adapt will leave critical systems exposed to threats we are only beginning to understand.

IEC 62443 and AI Cybersecurity, Building Resilient AI for Industrial Systems

Industrial cybersecurity has long been governed by structured frameworks designed to secure operational technology (OT) environments. Among them, IEC 62443 stands as the gold standard for securing industrial control systems (ICS). But AI’s growing presence in these environments is stretching the framework in new directions. The question now is: how do we apply IEC 62443 to AI-driven industrial systems?

The fundamental principles of IEC 62443 (defence in depth, secure zones and conduits, and access control) are directly relevant to AI security. AI models in ICS and SCADA environments must be treated as critical assets, protected with the same rigour as PLCs, sensors, and industrial networks. This means enforcing strict access control over AI training data, ensuring secure inference environments, and continuously monitoring AI model behaviour for adversarial manipulation.

Yet, AI introduces challenges that IEC 62443 was not originally designed for. Traditional OT security assumes static systems with clear perimeters, but AI models are dynamic, continuously learning, and highly dependent on data integrity. Attackers can manipulate training datasets, inject poisoned sensor readings, or exploit AI model drift to induce dangerous operational failures. This calls for an evolution of IEC 62443 compliance, one that integrates AI anomaly detection, adversarial training, and cryptographically verifiable model integrity.
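To make the idea of screening for poisoned sensor readings concrete, here is a minimal Python sketch. It flags readings that sit far from the median, using a modified z-score built on the median absolute deviation (MAD). The function name `robust_outliers`, the threshold of 3.5, and the temperature values are illustrative assumptions; nothing here is prescribed by IEC 62443 or taken from a specific product.

```python
from statistics import median


def robust_outliers(readings, threshold=3.5):
    """Return indices of readings whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD), which a few
    extreme values cannot shift much, unlike the mean and standard deviation.
    """
    med = median(readings)
    mad = median(abs(r - med) for r in readings)
    if mad == 0:
        # At least half the readings equal the median: anything else is suspect.
        return [i for i, r in enumerate(readings) if r != med]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [i for i, r in enumerate(readings)
            if 0.6745 * abs(r - med) / mad > threshold]


# Hypothetical temperature readings with one injected value at index 4.
temps = [70.1, 69.8, 70.3, 70.0, 95.0, 69.9, 70.2]
suspect = robust_outliers(temps)
print(suspect)
```

A filter like this would only be one layer. It catches crude injections, while a patient attacker who nudges readings slowly would need drift monitoring on top.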

Regulators and industry leaders are now recognising the urgency of securing AI within the IEC 62443 framework. AI in industrial environments must be hardened against adversarial threats, its decision-making processes must be explainable and auditable, and its deployment must align with zero-trust principles. As AI takes a more dominant role in critical infrastructure, applying IEC 62443 to AI security is no longer just good practice; it is essential for preventing catastrophic failures.

NIS2 and the Expansion of AI as Critical Infrastructure

Europe’s NIS2 Directive is redefining what it means to be critical infrastructure, and AI-driven systems fall squarely within its reach. Designed to improve cybersecurity across essential sectors, NIS2 widens the scope of its predecessor to cover more sectors and more digital infrastructure, including cloud services, and with them the AI-driven automation those entities depend on. The message is clear: AI is now a critical dependency, and its security must be treated as a national priority.

The shift is long overdue. AI is already at the heart of smart grids, transport logistics, financial risk modelling, and healthcare diagnostics. If these systems fail due to AI exploitation, whether through data poisoning, adversarial attacks, or manipulation of predictive models, the consequences extend beyond financial loss to disruptions in energy supply, medical misdiagnoses, and breakdowns in national security frameworks.

Under NIS2, organisations using AI in critical sectors are now subject to stricter security controls, mandatory incident reporting, and resilience obligations. This is a radical departure from the largely voluntary AI security guidelines of the past. Companies deploying AI in critical infrastructure will need to demonstrate robust cybersecurity measures, AI model integrity verification, and compliance with evolving EU AI governance frameworks.

As AI becomes more deeply integrated into industrial processes, regulatory oversight will only tighten. The introduction of NIS2 is just the beginning; the next phase will demand AI systems that are resilient by design, transparent in their decision-making, and hardened against adversarial manipulation. The AI arms race is no longer just about innovation; it is about survival in an era where AI is both the attack vector and the defence mechanism.

DORA and the Financial Sector’s AI Risk

The Digital Operational Resilience Act (DORA) is set to redefine financial sector security, and AI is firmly in the spotlight. As financial institutions become increasingly dependent on AI-driven risk assessment, fraud detection, and algorithmic trading, DORA enforces strict resilience standards, treating AI security as a fundamental requirement for financial stability. The reason is simple: AI is no longer just analysing transactions; it is controlling them.

Finance is one of the most AI-dependent industries, but it is also one of the most targeted. Attackers are already exploiting AI vulnerabilities to bypass fraud detection algorithms, manipulate credit scoring models, and execute adversarial attacks against automated trading systems. The risk isn’t theoretical: real-world AI-driven fraud cases are rising, and regulators are now enforcing resilience obligations to counter these evolving threats.

For AI, DORA’s requirements on ICT risk management, resilience testing, and third-party oversight translate into continuous risk monitoring, adversarial testing, and explainable decision-making processes. Firms must be able to show that their AI models are not vulnerable to manipulation, biased in their assessments, or exposed to data poisoning. This moves AI security from an IT afterthought to a compliance necessity: financial organisations must now audit, test, and harden their AI models just as rigorously as they do their core financial systems.

The financial sector has always been an arms race between defenders and attackers, but AI changes the battlefield. As DORA enforces accountability, resilience, and regulatory scrutiny, financial institutions will have to redesign their AI security posture to withstand not just conventional cyber threats but the emerging era of AI-powered financial warfare.

The Expanding Attack Surface, AI in Industrial Environments

AI is now woven into the fabric of industrial operations, from predictive maintenance in manufacturing to real-time anomaly detection in energy grids. While this promises efficiency gains, it also expands the attack surface, exposing AI models to threats that industrial security frameworks were never designed to handle. The risks are no longer hypothetical; attackers are actively exploiting AI-driven automation to disrupt industrial processes.

The threats are diverse. Data poisoning allows adversaries to manipulate AI models by corrupting training datasets, causing misclassifications that can lead to faulty predictive maintenance or incorrect industrial diagnostics. Adversarial perturbations can trick AI-powered security cameras into ignoring trespassers or misidentifying operational hazards, while model inversion attacks allow attackers to extract sensitive operational data from AI models, exposing proprietary industrial processes.
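A toy example helps show why data poisoning is so effective. The Python sketch below trains a deliberately simple nearest-centroid classifier on vibration readings, then retrains it after an attacker has injected a handful of mislabelled samples. The classifier, the helper names `train_centroids` and `classify`, and all the numbers are assumptions chosen for illustration; real predictive maintenance models are far more complex, but the failure mode is the same.

```python
from statistics import fmean


def train_centroids(samples):
    """samples: list of (reading, label) pairs. Returns {label: mean reading}."""
    by_label = {}
    for reading, label in samples:
        by_label.setdefault(label, []).append(reading)
    return {label: fmean(values) for label, values in by_label.items()}


def classify(centroids, reading):
    """Assign the label whose centroid is closest to the reading."""
    return min(centroids, key=lambda label: abs(reading - centroids[label]))


# Hypothetical vibration amplitudes from healthy and faulty pumps.
clean = ([(v, "healthy") for v in (1.0, 1.2, 0.8, 1.1, 0.9)]
         + [(v, "faulty") for v in (3.0, 3.2, 2.8, 3.1, 2.9)])

# Attacker injects high-vibration samples falsely labelled as healthy.
poison = [(4.0, "healthy")] * 5

reading = 2.6  # a pump that is genuinely developing a fault
clean_verdict = classify(train_centroids(clean), reading)
poisoned_verdict = classify(train_centroids(clean + poison), reading)
print(clean_verdict, poisoned_verdict)
```

With the clean data the reading is classed as faulty. After poisoning, the healthy centroid is dragged upward and the same reading is waved through as healthy, without the attacker ever touching the model itself.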

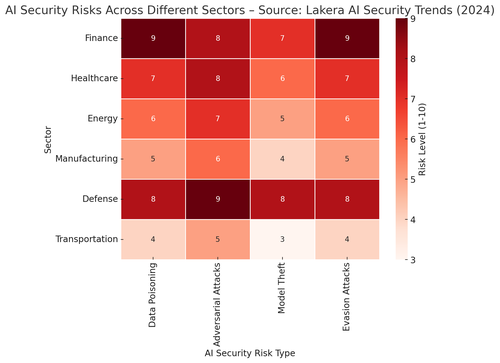

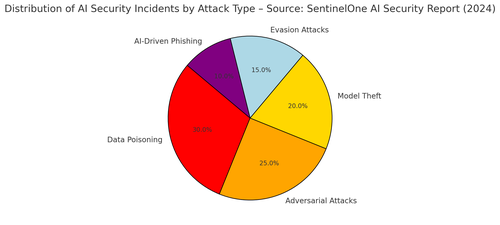

The accompanying visual illustrates how these AI-specific attack methods distribute across different industrial sectors, highlighting which industries are most exposed to each risk type.

Finance and defence face high exposure to model theft, while healthcare and energy sectors are particularly vulnerable to adversarial attacks. Understanding these attack vectors is no longer just an academic exercise; it is essential for designing robust AI defences that can withstand targeted exploitation.

As AI becomes an operational dependency in industrial environments, organisations must adapt their security strategies. Traditional IT firewalls and network segmentation will not protect against adversarial ML attacks. Instead, securing AI requires robust model integrity verification, continuous adversarial stress testing, and real-time anomaly detection to catch AI manipulation in action. Without these defences, the AI-driven industrial revolution could become an AI-driven industrial crisis.

AI Supply Chain Risk – The Trojan Horse Inside Industrial Systems

AI does not exist in isolation. The models deployed in industrial systems, financial institutions, and national security infrastructure are often built on third-party frameworks, trained on external datasets, and integrated with cloud-based services. This opens the door to one of the most overlooked threats in AI security: supply chain risk. If attackers can compromise AI models at any point in this chain, they can embed vulnerabilities directly into critical systems.

Unlike traditional supply chain risks that focus on hardware integrity and software dependencies, AI introduces new, harder-to-detect attack vectors. A compromised machine learning model might function normally during validation but produce biased or manipulated outputs when deployed in real-world environments. Attackers can embed backdoors into pre-trained models, alter training datasets to introduce systemic biases, or even manipulate cloud-hosted AI services to leak sensitive data.

The accompanying visual demonstrates how AI supply chain risks manifest in different industries, breaking down the types of attacks most commonly exploited. Financial institutions face the highest risk from model theft and adversarial manipulation, while industrial control systems are particularly vulnerable to data poisoning and corrupted training pipelines. Without strict validation procedures and cryptographic integrity checks, organisations may be deploying AI systems that have already been compromised before they even go live.
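As a minimal sketch of what a cryptographic integrity check on a model artifact might look like, the Python below compares the SHA-256 digest of a model file's bytes against a digest pinned at release time. The helper names `sha256_hex` and `verify_artifact` and the placeholder byte strings are assumptions for illustration; only `hashlib.sha256` and `hmac.compare_digest` come from the standard library.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_digest: str) -> bool:
    """True only if the artifact matches the digest recorded at release."""
    # compare_digest runs in constant time, so it does not leak how many
    # leading characters of the digest matched.
    return hmac.compare_digest(sha256_hex(data), pinned_digest)


# Placeholder bytes standing in for a serialised model file.
released = b"model-weights-v1"
pinned = sha256_hex(released)  # assumed to be stored out of band at release

# The same artifact after a hypothetical supply chain modification.
tampered = released + b"\x00backdoor"

ok_original = verify_artifact(released, pinned)
ok_tampered = verify_artifact(tampered, pinned)
print(ok_original, ok_tampered)
```

A pinned hash only proves that the artifact is the one that was released; it says nothing about whether the release itself was trustworthy. Establishing provenance would additionally need digital signatures and a trusted channel for distributing the digest.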

Regulators are beginning to take AI supply chain security seriously. Proposed updates to IEC 62443 and NIS2 include stricter auditing requirements for third-party AI models, and the financial sector is moving toward AI model certification frameworks under DORA. But the reality is that AI security starts long before deployment; it begins with rigorous supply chain validation, continuous threat monitoring, and a zero-trust approach to external AI integrations.

The Engineering of Secure AI, Building for Industrial-Grade Resilience

Defending AI is not just about reacting to threats; it is about engineering resilience into AI systems from the ground up. Unlike traditional cybersecurity, where security patches and software updates can mitigate vulnerabilities after deployment, AI security failures can be baked into the model itself, making them far harder to detect and remediate. For AI in critical infrastructure, this means security must be designed into every phase of AI development, deployment, and monitoring.

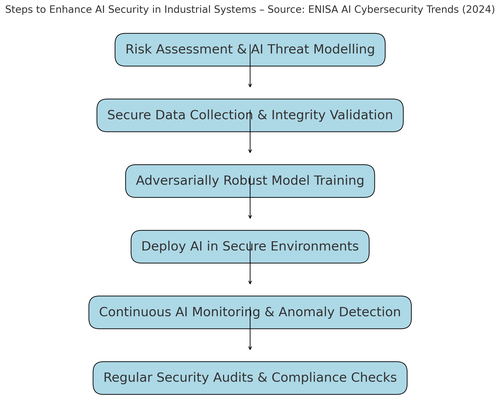

The principles of industrial cybersecurity, defence in depth, anomaly detection, and zero trust architectures, must now extend to AI systems. This requires a multi-layered approach, starting with secure training data pipelines, robust model verification techniques, and continuous monitoring to detect adversarial manipulation. As the accompanying flowchart illustrates, securing AI is not a single step but an ongoing engineering process that must be embedded into industrial and financial systems from day one.

One of the most effective approaches is adversarial training, where models are trained on inputs crafted with known attack techniques, paired with adversarial stress testing before deployment. Another critical layer is cryptographically verifiable model integrity, ensuring that AI models have not been tampered with before execution. Trusted execution environments (e.g., Intel SGX enclaves, AMD SEV encrypted virtual machines) can further protect AI from memory-based attacks, making real-time model exploitation significantly harder.
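The pre-deployment stress testing described above can be sketched as a simple release gate. The Python below applies the fast gradient sign method (FGSM) to a hand-set linear classifier and measures how much accuracy survives a bounded perturbation. The weights, data points, perturbation size of 0.2, and the 0.9 pass mark are all illustrative assumptions, and this is a test harness only; it does not perform adversarial training.

```python
def score(w, b, x):
    """Linear decision score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, x, y, eps):
    """FGSM perturbation for a logistic model with weights w.

    The loss gradient with respect to x is (p - y) * w, so its sign is
    -sign(w) when y == 1 and +sign(w) when y == 0.
    """
    direction = -1 if y == 1 else 1
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, w)]


def robust_accuracy(w, b, data, eps):
    """Fraction of samples still classified correctly after the attack."""
    hits = sum(predict(w, b, fgsm(w, x, y, eps)) == y for x, y in data)
    return hits / len(data)


# Hypothetical model and labelled evaluation set (1 = fault, 0 = normal).
w, b = [2.0, -1.0], 0.0
data = [([1.0, 0.0], 1), ([0.3, 0.2], 1), ([-1.0, 0.5], 0), ([-0.2, 0.1], 0)]

clean_acc = robust_accuracy(w, b, data, eps=0.0)
attacked_acc = robust_accuracy(w, b, data, eps=0.2)
passes_gate = attacked_acc >= 0.9  # assumed release threshold
print(clean_acc, attacked_acc, passes_gate)
```

In this toy case the model is perfect on clean inputs but loses half its accuracy under a small perturbation, so it fails the gate. Clean accuracy alone says very little about how a model behaves under attack.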

Industrial AI security is no longer just an emerging field; it is a necessity. As AI takes on mission-critical decision-making roles, resilience must be engineered into its very architecture, not patched as an afterthought. The organisations that thrive in this new era will be those that approach AI security not just as a compliance requirement, but as an engineering discipline in its own right.

From AI Governance to AI Resilience, Preparing for AI-Powered Threats

AI security is no longer just about governance; it is about surviving in a threat landscape where AI itself is becoming a weapon. Attackers are already using AI to automate cybercrime, create deepfake fraud schemes, and manipulate AI-driven financial models. The evolution of AI-powered malware, automated phishing campaigns, and model inversion attacks means that AI security must now shift from passive compliance to active resilience.

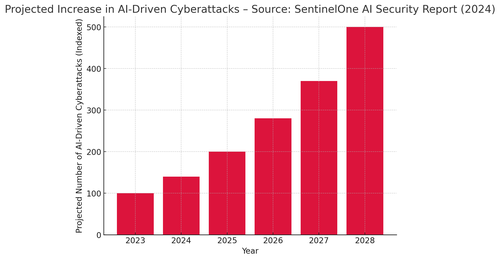

The scale of the challenge is growing at an alarming rate. As the accompanying bar chart illustrates, the number of AI-driven cyberattacks is projected to rise dramatically over the next five years.

Threat actors are leveraging AI to bypass traditional security measures, generate undetectable exploits, and automate large-scale attacks. This is no longer a hypothetical concern; AI-powered attacks are already being deployed in espionage, financial fraud, and industrial sabotage.

Defending against these threats requires a radical shift in security strategy. AI-driven cyberattacks can only be countered by AI-driven defences. This means investing in autonomous cybersecurity solutions, real-time anomaly detection, and adversarial AI testing. Organisations must move beyond static rule-based security models and embrace continuous, adaptive AI security, where AI itself learns to detect and neutralise adversarial behaviour in real time.
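As a rough illustration of moving from static rules to adaptive monitoring, the Python sketch below keeps an exponentially weighted moving average (EWMA) of a monitored signal and flags values that stray too far from it. The class name `EwmaDetector`, its parameters, and the sample stream are assumptions made for this example; a production detector would be considerably more sophisticated.

```python
class EwmaDetector:
    """Flags values that deviate from a slowly adapting baseline."""

    def __init__(self, alpha=0.2, tolerance=1.0, warmup=3):
        self.alpha = alpha          # how quickly the baseline adapts
        self.tolerance = tolerance  # allowed distance from the baseline
        self.warmup = warmup        # observations to absorb before flagging
        self.mean = None
        self.seen = 0

    def update(self, value):
        """Consume one observation; return True if it looks anomalous."""
        if self.mean is None:
            self.mean = value
            self.seen = 1
            return False
        deviates = abs(value - self.mean) > self.tolerance
        anomalous = self.seen >= self.warmup and deviates
        if not anomalous:
            # Only normal values move the baseline, so a single spike
            # cannot drag it toward the attacker's target.
            self.mean += self.alpha * (value - self.mean)
        self.seen += 1
        return anomalous


# Hypothetical stream of a model output, with one manipulated value.
stream = [10.0, 10.1, 9.9, 10.0, 10.2, 14.0, 10.1]
detector = EwmaDetector()
flags = [detector.update(v) for v in stream]
print(flags)
```

The baseline adapts to gradual, legitimate change, which a fixed threshold cannot do. The trade-off is that a slow, deliberate drift could still shift it, which is why detectors like this are usually combined with other controls.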

The next frontier of AI security will be an arms race between attackers using AI for exploitation and defenders using AI for resilience. The organisations that succeed will be those that treat AI security not as a checkbox for compliance, but as a strategic imperative. As cyber threats become increasingly automated, the only viable defence is to ensure that security moves as fast as the attackers do.

The Regulatory Battlefield, How Governments are Securing AI

Governments are no longer waiting for the private sector to solve AI security; they are stepping in with hard rules and regulatory enforcement. The EU AI Act, NIST AI Risk Management Framework, and DHS AI Security Playbook are just the beginning. These policies are shifting AI security from an industry concern to a national and global priority. Organisations that fail to comply will not just face cybersecurity risks; they will face legal and financial consequences.

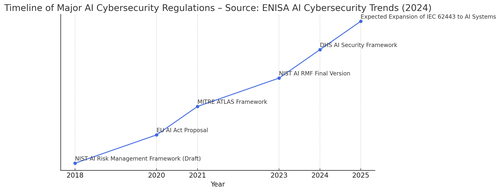

The regulatory landscape is evolving rapidly. As the accompanying timeline visual demonstrates, the past five years have seen an explosion of AI security regulations, moving from voluntary guidelines to mandated compliance. The EU AI Act introduces strict rules for high-risk AI systems, while NIST’s AI RMF lays out detailed risk management requirements. Meanwhile, DORA, IEC 62443, and NIS2 are forcing AI security frameworks into critical infrastructure and financial sectors.

These regulations demand more than just theoretical security controls. AI-driven systems must now be explainable, auditable, and resistant to adversarial manipulation. Compliance requirements include regular AI stress testing, transparent decision-making models, and strict supply chain security for third-party AI components. Organisations must now prove that their AI models are not just operationally efficient, but also secure, unbiased, and resilient.
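One way to make AI decisions auditable in practice is a tamper-evident decision log. The Python sketch below chains each log entry to the previous one with a SHA-256 hash, so any later edit to a recorded decision breaks verification. The function names `append_entry` and `verify_log` and the record fields are assumptions for illustration, not a format required by any of the regulations discussed here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _entry_hash(prev_hash, record):
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a decision record, chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify_log(log):
    """Recompute every hash; False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        expected = _entry_hash(prev_hash, entry["record"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decisions made by a deployed model.
log = []
append_entry(log, {"model": "pump-fault-v3", "input_id": "s-1041",
                   "decision": "shutdown"})
append_entry(log, {"model": "pump-fault-v3", "input_id": "s-1042",
                   "decision": "continue"})
intact = verify_log(log)

# Someone quietly rewrites history.
log[0]["record"]["decision"] = "continue"
after_tamper = verify_log(log)
print(intact, after_tamper)
```

The chain detects edits but does not prevent them. An attacker who can rewrite the whole log could recompute every hash, so the latest hash would need to be anchored somewhere they cannot reach.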

We are entering a new phase of AI security, one where governments are not just setting guidelines, but actively enforcing AI safety standards. As AI becomes embedded in critical infrastructure, financial systems, and national security, regulation will only intensify. The message is clear: adapt now, or risk being left behind in an era where AI security is no longer optional; it is the law.

Conclusion – The Future of AI Security is Industrial Resilience

AI security is no longer just about data protection and software vulnerabilities; it is about industrial resilience, operational integrity, and regulatory survival. AI is now embedded in power grids, financial markets, healthcare systems, and national security infrastructure. A failure in AI security is no longer a technical issue; it is a systemic risk with real-world consequences.

The expansion of NIS2, DORA, and the EU AI Act signals a new reality: AI security is not optional; it is mandatory. Organisations must move beyond reactive cybersecurity and embrace proactive AI resilience engineering, treating AI security as an integral part of industrial defence strategies. IEC 62443 is evolving to incorporate AI, and financial institutions must now prove their AI models are adversarially robust before they are deployed at scale.

For AI security to succeed, it must be built on continuous monitoring, adversarial stress testing, and real-time anomaly detection. AI-powered cyber threats are already here, and defensive AI must evolve faster than the attackers. The organisations that survive this new era will be those that recognise AI security as a battlefield, not a compliance checkbox.

The future of AI security belongs to those who engineer resilience, not just innovation. We are moving into a world where AI is not just an asset; it is a liability if left unsecured. The choice is simple: harden AI now, or face the consequences when it fails.
