By Paula Livingstone on Aug. 16, 2024, 2:20 p.m.

Tagged with: Cybersecurity Privacy Security Society Technology AI Future Machine Learning Risk Management Threat Detection Critical Infrastructure Anomaly Detection Attack Surface Data Data Manipulation Encryption Epoch Governance

Artificial intelligence is not just a tool; it is an evolving entity that is reshaping the adversarial landscape of cybersecurity.

Its rise has introduced unprecedented capabilities, but with them come security risks that even the most advanced defences struggle to contain. The very mechanisms that make AI powerful (its adaptability, automation, and ability to process vast amounts of data) also make it vulnerable in ways we are only beginning to understand. Threat actors are already exploiting these weaknesses, embedding adversarial exploits, poisoning datasets, and manipulating AI-generated outputs to deceive and disrupt.

As AI continues its rapid integration into critical infrastructure, finance, healthcare, and cybersecurity itself, accelerated in large part by the marked cost savings it promises, the stakes are rising. The question is no longer whether AI can be secured but rather whether traditional security models are equipped to handle the challenges it presents. Understanding these risks is the first step in building defences that can keep pace with AI’s relentless evolution.

Introduction to Artificial Intelligence Security Risks

Artificial intelligence has transitioned from a futuristic concept to what looks set to become an integral part of modern infrastructure. From automating business operations to enhancing cybersecurity itself, AI’s capabilities are expanding at an extraordinary pace, and that journey is just getting started. However, this rapid adoption has also introduced vulnerabilities that challenge traditional security frameworks. The very properties that make AI powerful (its ability to learn, adapt, and generate) are also the traits that expose it to novel attack vectors.

One of the fundamental concerns is that AI systems operate on vast amounts of data, often aggregated from diverse sources. If these sources contain biased, misleading, or malicious information, the AI model may produce unreliable or even dangerous outputs. Attackers can exploit these weaknesses through methods like data poisoning, where training datasets are subtly manipulated to alter AI behaviour in unintended ways. Unlike conventional software vulnerabilities, these issues do not stem from misconfigurations but from the model’s own learning process.

Beyond data manipulation, the opacity of AI decision-making further complicates security. Many advanced models, particularly deep learning systems, function as black boxes, making it difficult to interpret how they arrive at their conclusions. This lack of transparency creates blind spots that adversaries can leverage, crafting inputs specifically designed to mislead the AI. As AI adoption accelerates across industries, ensuring the integrity and accountability of these systems becomes a pressing challenge.

As modern society continues to discover the hidden, sometimes unexpected cybersecurity challenges of AI, it will become evident that securing these systems requires a paradigm shift. Conventional security measures alone are insufficient against threats that evolve alongside the models they target. A new approach, one that integrates AI-specific defences, continuous monitoring, and robust validation techniques, is essential to safeguarding AI-driven infrastructure in an increasingly hostile digital environment.

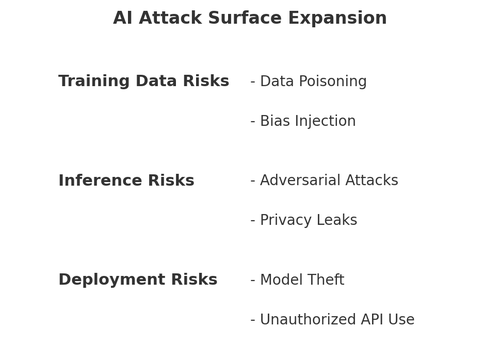

The Expanding Attack Surface of Generative AI

Generative AI has introduced a new era of automation, creativity, and problem-solving, but its rapid adoption has also expanded and thinned the attack surface. Unlike traditional software systems, which rely on predefined rules and static datasets, generative AI models continuously learn and adapt. This flexibility is a double-edged sword: while it enhances performance, it also opens the door to unpredictable vulnerabilities that cybercriminals are quick to exploit.

One of the most pressing concerns is the growing reliance on AI-generated content across industries. From automated financial analysis to AI-assisted medical diagnoses, organizations are embedding generative models into critical decision-making processes at an alarming pace. This dependence makes them lucrative targets for attackers, who can manipulate input data or inject adversarial perturbations to distort outputs. Even minor modifications to input data can cause AI systems to misinterpret information, leading to security breaches or financial losses.

Another emerging threat is the unauthorized access and misuse of generative AI models. Open-source AI frameworks and publicly available pre-trained models, hosted on platforms such as Hugging Face, have democratized access to powerful AI capabilities. However, this accessibility also means that malevolent actors can repurpose these models for malicious activities, such as generating deepfake content, crafting highly convincing phishing emails, or automating large-scale misinformation campaigns. One need only look at how straightforward it is to carry out transfer learning on an existing complex model, by adding a few new trainable layers, to understand the sea change that is coming. The blurred boundary between legitimate AI applications and their weaponized counterparts makes detection and mitigation increasingly difficult.

As AI systems become more integrated into corporate and government infrastructure, the potential impact of their exploitation grows exponentially. Securing generative AI requires a shift in security strategies: organizations must implement strict access controls, continuously audit AI-generated outputs, and invest in adversarial testing to identify weaknesses before they can be exploited. Without proactive measures, generative AI could become one of the most potent attack vectors in modern cybersecurity.

Adversarial Attacks and the Manipulation of AI Models

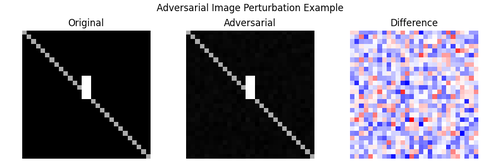

Unlike traditional cyber threats that exploit software vulnerabilities, adversarial attacks target the very nature of how AI models interpret data. By introducing subtle, carefully crafted manipulations to input data, attackers can force AI models to misclassify images, misinterpret text, or even generate entirely incorrect outputs. These attacks are particularly dangerous because they can bypass conventional security measures, exploiting the fundamental way AI systems process information. Steganography, which hides a payload inside innocuous-looking data, complicates this challenge further.

One well-documented method is the adversarial example attack, where imperceptible changes to an image or text input cause an AI system to make incorrect predictions.
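To make the mechanism concrete, here is a minimal sketch of a fast-gradient-sign style perturbation against a tiny logistic-regression classifier. The weights, the input, and `epsilon` are invented for illustration, and the helper names (`predict`, `fgsm_perturb`) are hypothetical. Real attacks target far larger models, where the per-feature change can be much smaller because its effect accumulates across thousands of dimensions.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift every feature by +/- epsilon in the direction that raises the loss."""
    p = predict(weights, bias, x)
    # For cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    gradient = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

# Illustrative values only (assumptions, not taken from any real system).
weights, bias = [2.0, -3.0, 1.0], 0.0
x = [0.3, 0.1, 0.2]            # correctly classified as class 1
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.15)

print(round(predict(weights, bias, x), 3))      # above 0.5: class 1
print(round(predict(weights, bias, x_adv), 3))  # below 0.5: class 0
```

No feature moves by more than `epsilon`, yet the predicted class flips, because every small change is aligned with the direction the model is most sensitive to.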

Beyond deception, adversarial attacks also pose a significant challenge to AI security in military and critical infrastructure applications. In cybersecurity, threat detection models can be manipulated by attackers injecting adversarial noise into network traffic, allowing malicious activities to go undetected. In financial markets, AI-based trading algorithms could be tricked into executing poor investment decisions through adversarially crafted market signals, causing significant financial disruption.

Defending against adversarial attacks requires AI models to be stress-tested under conditions designed to simulate real-world threats. Techniques such as adversarial training, where models are exposed to manipulated inputs during training, can improve their resilience. Additionally, implementing robust anomaly detection systems can help identify and neutralize suspicious patterns before they cause harm. Without these measures, adversarial attacks will remain a potent weapon against AI-driven systems.

Data Poisoning and the Integrity of Training Data

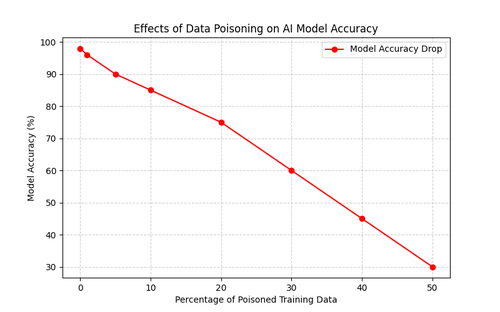

Artificial intelligence models are only as reliable as the data they are trained on. When that data is compromised, the consequences can be far-reaching. Data poisoning is a targeted attack strategy where adversaries inject malicious or misleading data into training datasets, altering an AI model’s behaviour in subtle or severe ways. Since AI models learn from patterns in their training data, even a small percentage of corrupted inputs can significantly distort their outputs.
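The effect can be illustrated with a deliberately tiny example. The sketch below assumes a one-dimensional nearest-centroid classifier and made-up data; the function names are hypothetical. A single mislabelled point is enough to drag one class centroid far enough to change the verdict on a borderline input.

```python
def centroid(values):
    return sum(values) / len(values)

def train(samples):
    """Nearest-centroid 'model': one mean per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: centroid(values) for label, values in by_label.items()}

def classify(model, value):
    return min(model, key=lambda label: abs(value - model[label]))

clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (9, "malicious"), (10, "malicious"), (11, "malicious")]
poisoned = clean + [(30, "benign")]   # one mislabelled point injected by the attacker

print(classify(train(clean), 7))      # malicious
print(classify(train(poisoned), 7))   # benign
```

The injected point here is a crude outlier that simple checks would catch. Poisoning in practice tends to be spread across many plausible-looking records, which is what makes it hard to spot.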

One of the biggest risks of data poisoning is its stealthy nature. Unlike traditional malware, which can often be detected through signature-based defences, poisoned data blends into legitimate datasets, making it difficult to identify. Attackers can exploit this by introducing biases into AI-driven decision-making systems, subtly skewing outputs over time.

The implications extend beyond cybersecurity. In financial markets, a data poisoning attack could manipulate an AI-powered trading algorithm to misinterpret market trends, leading to incorrect investment decisions. In medicine, poisoned training data in diagnostic AI systems could lead to dangerous misclassifications of medical conditions, impacting patient outcomes. Since many AI models rely on publicly sourced or crowdsourced data, the risk of undetected contamination is a growing concern.

Mitigating data poisoning requires a multi-layered approach. Organizations must implement strict validation processes to ensure training data integrity, using anomaly detection techniques to identify outliers and inconsistencies. Secure data provenance tracking and cryptographic verification methods can further safeguard datasets from tampering. As AI continues to influence critical decision-making across industries, securing its foundation of trusted and verifiable data will be a cornerstone of AI security.
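One simple form of cryptographic verification is to fingerprint a dataset when it is approved and check that fingerprint again before training. The sketch below uses Python's standard `hashlib` and `hmac` modules; the record format, the key, and the function names are assumptions made for illustration.

```python
import hashlib
import hmac

def fingerprint(records):
    """SHA-256 over length-prefixed records, so record boundaries cannot be shifted."""
    digest = hashlib.sha256()
    for record in records:
        encoded = record.encode("utf-8")
        digest.update(len(encoded).to_bytes(8, "big"))
        digest.update(encoded)
    return digest.hexdigest()

def sign_dataset(records, key):
    """HMAC tag binding the fingerprint to a secret held by the data owner."""
    return hmac.new(key, fingerprint(records).encode("ascii"), hashlib.sha256).hexdigest()

def verify_dataset(records, key, expected_tag):
    return hmac.compare_digest(sign_dataset(records, key), expected_tag)

key = b"demo-key-do-not-reuse"   # hypothetical; load real keys from a secrets manager
dataset = ["user=1,label=0", "user=2,label=1", "user=3,label=0"]
tag = sign_dataset(dataset, key)

tampered = list(dataset)
tampered[1] = "user=2,label=0"     # a single flipped label

print(verify_dataset(dataset, key, tag))    # True
print(verify_dataset(tampered, key, tag))   # False
```

A check like this only detects changes made after the dataset was signed. It says nothing about poison that was already present when the data was collected, which is why it complements validation and outlier detection rather than replacing them.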

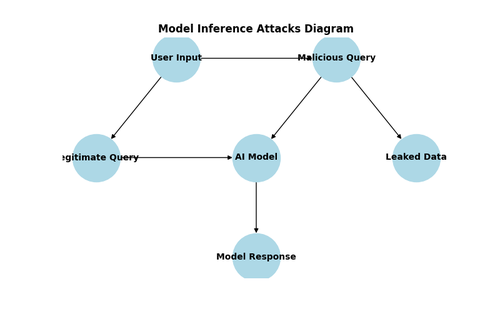

Privacy Risks and Model Inference Leaks

Artificial intelligence systems process vast amounts of data, often containing sensitive personal or proprietary information. While models are designed to generalize knowledge rather than memorize specific data points, research has shown that AI can inadvertently leak information through model inference attacks. These attacks allow adversaries to extract details about the training data, raising serious privacy concerns for organizations and individuals alike.

One of the most concerning risks is membership inference attacks, where an attacker can determine whether a specific data point was part of the model’s training dataset.
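The intuition behind the simplest version of this attack is that overfitted models tend to be more confident on records they were trained on. The sketch below is a toy under stated assumptions: `model_confidence` is a hypothetical stand-in for querying a real model, and the data and threshold are invented.

```python
import math

TRAINING_SET = [1.0, 4.0, 7.5]     # private data the attacker never sees directly

def model_confidence(x):
    """Stand-in for an overfitted model: confidence peaks on memorised points."""
    nearest = min(abs(x - point) for point in TRAINING_SET)
    return math.exp(-nearest)

def guess_membership(x, threshold=0.9):
    """Attacker's rule: very high confidence suggests x was seen in training."""
    return model_confidence(x) >= threshold

print(guess_membership(4.0))   # True
print(guess_membership(5.5))   # False
```

The attacker needs nothing more than the ability to query the model and read its confidence, which is why exposing raw confidence scores is itself a privacy decision.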

Generative AI models introduce an additional layer of risk by producing outputs that may unintentionally expose sensitive information. In some cases, AI-generated text can regurgitate fragments of its training data, leading to potential data leaks. This is especially problematic when models are trained on proprietary datasets or personal communications, as adversaries can exploit these vulnerabilities to extract useful intelligence.

To mitigate privacy risks, organizations must adopt privacy-preserving AI techniques such as differential privacy, which introduces controlled noise to limit what any output can reveal about an individual record. Secure federated learning can also reduce exposure by training models locally without centralizing sensitive data. As AI models continue to evolve, ensuring that privacy safeguards keep pace with adversarial tactics will be critical to maintaining trust in AI-driven systems. Zero-knowledge proofs also look likely to play a large part in the future containment and control of AI models.
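As a rough illustration of "controlled noise", the sketch below applies the Laplace mechanism to a counting query. The data, the `epsilon` value, and the function names are assumptions; the noise is drawn using only Python's standard `random` module.

```python
import random

def laplace_noise(scale, rng):
    """The difference of two exponential draws follows a Laplace(0, scale) distribution."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(values, predicate, epsilon, rng):
    """Epsilon-differentially-private count; a counting query has sensitivity 1."""
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)   # seeded only so the demo is repeatable
ages = [23, 35, 41, 29, 52, 60, 38, 45]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))   # the true count is 4; the released value is offset by random noise
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of accuracy. Choosing that trade-off is a policy decision as much as a technical one.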

The Weaponization of AI in Cybercrime

Artificial intelligence is not only a target for cyberattacks but also an increasingly powerful tool in the hands of cybercriminals. As AI capabilities advance, attackers are leveraging machine learning models to automate cybercrime, scale phishing campaigns, and generate highly convincing deepfake content. The same technologies that enhance security and productivity can also be repurposed for deception, fraud, and large-scale misinformation.

One of the most notable applications of AI in cybercrime is the automation of social engineering attacks. AI-powered phishing tools can analyse online behaviour, craft hyper-personalized messages, and adapt in real time to increase their effectiveness. Unlike traditional phishing attempts, which often rely on generic scripts, AI-enhanced phishing can dynamically generate messages that mimic the tone, writing style, and contextual knowledge of trusted contacts, making them significantly harder to detect.

Deepfake technology further amplifies the threat landscape by enabling realistic video and audio impersonations. Cybercriminals have already used deepfake voices to impersonate executives in fraudulent schemes, tricking employees into transferring large sums of money. The ability to create highly convincing synthetic media introduces new challenges for identity verification and digital trust, as traditional authentication methods struggle to keep pace with AI-driven deception. Cryptographic digital identity appears to be one of the key defences in this respect.

Defending against AI-driven cybercrime requires a shift in security strategies. Organizations must employ AI-powered detection tools to counter adversarial AI, continuously monitor for anomalies, and educate users on the evolving tactics of AI-enabled attacks. As AI arms both attackers and defenders, the cybersecurity battle is becoming an intelligence race where only the most adaptive security measures will prevail.

Challenges in Governance and Regulatory Compliance

As artificial intelligence continues to integrate into critical sectors, governments and regulatory bodies are struggling to keep pace with the security and ethical challenges it presents. Unlike traditional software, AI systems do not operate on fixed rule sets but rather evolve through continuous learning, making regulatory oversight more complex. Ensuring compliance while maintaining innovation requires a delicate balance that many industries have yet to achieve.

One of the primary challenges in AI governance is accountability. When AI systems make decisions that result in harm, whether through biased hiring algorithms, incorrect medical diagnoses, or financial miscalculations, determining responsibility becomes a legal and ethical grey area. The lack of transparency in many AI models further complicates regulatory efforts, as even developers may struggle to explain how specific decisions were reached.

Regulatory frameworks such as the European Union’s AI Act and the evolving guidelines from organizations like the National Institute of Standards and Technology attempt to address these challenges by requiring or encouraging transparency, bias mitigation, and risk assessments. However, the rapid evolution of AI technology means that regulations often lag behind real-world applications. This gap creates opportunities for malicious actors to exploit regulatory blind spots before laws and enforcement mechanisms can adapt.

To navigate these challenges, organizations must take a proactive approach to AI governance. Implementing strong internal compliance policies, conducting regular audits, and adopting explainable AI techniques can help align AI security with regulatory expectations. As governments refine AI regulations, businesses that anticipate these changes and embed security into their AI strategies will be better positioned to meet compliance requirements while maintaining operational efficiency.

Defensive AI and Security Strategies for Mitigation

As artificial intelligence becomes a target for cyber threats, it must also serve as a defender. AI-driven security solutions are emerging as a critical countermeasure against evolving attack techniques, offering the ability to detect anomalies, identify adversarial inputs, and respond to threats in real time. Defensive AI is not just an enhancement to existing security measures; it is becoming a necessity in an increasingly automated threat landscape.

One of the most effective defensive strategies is the use of AI-powered anomaly detection. Unlike traditional security tools that rely on static rules, machine learning models can analyse vast amounts of network traffic and identify deviations from normal behaviour. This enables organizations to detect previously unknown threats, such as zero-day exploits and sophisticated adversarial attacks, before they cause significant damage.
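The core idea, learning what "normal" looks like and flagging deviations from it, can be shown with a very small statistical baseline. The sketch below is a simplified stand-in for a learned model: it uses a z-score over made-up request counts, and the threshold of three standard deviations is an assumption rather than a recommendation.

```python
import statistics

def find_anomalies(baseline, observations, z_threshold=3.0):
    """Return observations lying more than z_threshold standard deviations from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return [value for value in observations
            if abs(value - mean) / stdev > z_threshold]

baseline_requests = [120, 118, 125, 122, 119, 121, 123, 117]
new_requests = [121, 119, 480, 124]

print(find_anomalies(baseline_requests, new_requests))   # [480]
```

Production systems replace the single mean and standard deviation with richer models of behaviour, but the principle of comparing new activity against a learned baseline rather than a fixed rule is the same.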

Another crucial aspect of AI security is adversarial robustness. Researchers are developing techniques to harden AI models against manipulation, including adversarial training, which exposes models to crafted attacks during training to improve their resilience. Other approaches, such as input validation and model monitoring, can help detect and filter adversarial inputs before they influence AI decision-making processes.

Ultimately, securing AI requires a multi-layered approach that combines human oversight with automated defences. While AI can enhance cybersecurity by identifying threats faster than human analysts, it is not infallible. Nor is it immune to compromise itself. Organizations must integrate explainability, continuous monitoring, and rigorous security testing into their AI deployments to ensure that defences evolve alongside emerging threats. As the cybersecurity landscape shifts, defensive AI will play a pivotal role in safeguarding digital infrastructure.

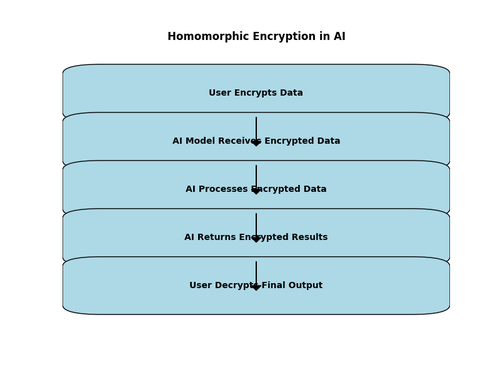

The Role of Cryptography and Secure AI Architectures

Securing artificial intelligence systems requires more than just reactive defences: strong cryptographic techniques and robust architectures must be embedded into AI frameworks from the ground up. As AI-driven applications handle sensitive data and make critical decisions, ensuring confidentiality, integrity, and authenticity is paramount. Without these safeguards, AI models remain susceptible to data breaches, adversarial manipulation, and unauthorized access.

One of the most promising cryptographic approaches in AI security is homomorphic encryption. This technique allows AI models to process encrypted data without ever decrypting it, preventing exposure of sensitive information during computation. In scenarios such as medical diagnostics or financial risk analysis, where data privacy is crucial, homomorphic encryption can enable secure AI processing without compromising confidentiality.
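To give a feel for computing on encrypted data, the sketch below implements a toy version of the Paillier cryptosystem, which is only additively homomorphic (a partial scheme, not fully homomorphic encryption). It is enough to evaluate a linear score over encrypted features. The primes, features, and weights are illustrative assumptions, the key size is far too small for real use, and `pow(x, -1, n)` requires Python 3.8 or later.

```python
import math
import random

def generate_keys(p, q):
    """Paillier key pair from two primes (toy sizes; real keys use much larger primes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                                 # valid because g = n + 1
    return n, (lam, mu)

def encrypt(n, message, rng):
    n_squared = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_squared) * pow(r, n, n_squared)) % n_squared

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n

def encrypted_weighted_sum(n, ciphertexts, weights):
    """Compute Enc(sum(w_i * x_i)) from Enc(x_i) without decrypting anything."""
    result = 1   # 1 is a valid encryption of 0 (with r = 1)
    for c, w in zip(ciphertexts, weights):
        result = (result * pow(c, w, n * n)) % (n * n)
    return result

rng = random.Random(42)          # for repeatability only; NOT a secure randomness source
n, private_key = generate_keys(1009, 1013)
features = [3, 5, 2]             # held by the data owner
weights = [2, 1, 4]              # held by the model owner
encrypted = [encrypt(n, x, rng) for x in features]
score = encrypted_weighted_sum(n, encrypted, weights)
print(decrypt(n, private_key, score))   # 19
```

The party computing the score only ever sees ciphertexts; only the holder of the private key can read the result. Anything beyond additions and multiplication by known constants needs a fully homomorphic scheme, which is considerably more expensive.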

Another essential security measure is the use of secure multi-party computation (SMPC). This allows multiple entities to collaboratively train or query AI models without revealing their private data to one another. SMPC is particularly useful in distributed or decentralised AI applications, where organizations need to share insights while complying with strict data privacy regulations.
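A common building block is additive secret sharing. In the sketch below, each party splits its private value into random shares, and only the combined total is ever reconstructed. The modulus, the values, and the function names are assumptions for illustration, and the protocol shown assumes every party follows the rules honestly.

```python
import secrets

MODULUS = 2**61 - 1   # all arithmetic is done modulo this prime

def split(secret, parties):
    """Split secret into additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    parties = len(private_values)
    # Row i holds the shares produced by party i; column j is what party j receives.
    distributed = [split(value, parties) for value in private_values]
    partial_sums = [sum(row[j] for row in distributed) % MODULUS for j in range(parties)]
    return sum(partial_sums) % MODULUS

print(secure_sum([120, 75, 310]))   # 505
```

Each individual share is a uniformly random number, so a party that sees only the shares sent to it learns nothing about any single input, while the published partial sums still add up to the correct total.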

Beyond cryptographic protections, secure AI architectures must incorporate robust access controls, model authentication mechanisms, and tamper-resistant deployment environments. Techniques such as federated learning, where models are trained locally on decentralized devices rather than relying on centralized datasets, further reduce the risks of data leakage and poisoning attacks. As AI systems become more integral to critical infrastructure, adopting these security-first principles will be essential in maintaining trust and resilience in AI-driven environments.
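Federated learning can be sketched in a few lines. The example below assumes a one-parameter model `y = weight * x`, three clients with made-up data, and a server that averages client weights in proportion to sample count (the usual federated averaging idea). The learning rate, step count, and function names are illustrative assumptions.

```python
def local_update(weight, data, learning_rate=0.05, steps=5):
    """A few gradient-descent steps for the model y = weight * x on one client's private data."""
    for _ in range(steps):
        gradient = sum((weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight

def federated_average(global_weight, client_datasets):
    """One round: clients train locally, the server averages weights by sample count."""
    updates = [(local_update(global_weight, data), len(data)) for data in client_datasets]
    total = sum(count for _, count in updates)
    return sum(weight * count for weight, count in updates) / total

clients = [
    [(1, 2), (2, 4)],
    [(3, 6)],
    [(1, 2), (4, 8), (2, 4)],
]
weight = 0.0
for _ in range(20):
    weight = federated_average(weight, clients)
print(round(weight, 4))   # 2.0
```

Only model weights travel to the server; the raw `(x, y)` records never leave each client. Weight updates can still leak information, which is why federated learning is often paired with techniques such as differential privacy or secure aggregation.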

Conclusion - The Future of AI Security in an Evolving Threat Landscape

Artificial intelligence is transforming the cybersecurity landscape, but with its rise comes an unprecedented wave of security challenges. The same models that drive innovation are also vulnerable to manipulation, adversarial attacks, and data poisoning, creating a complex and evolving threat environment. As AI becomes more deeply embedded in critical systems, securing it will no longer simply be recommended; it will become a necessity.

The arms race between attackers and defenders in AI cybersecurity is accelerating. Threat actors are developing increasingly sophisticated methods to exploit vulnerabilities, from leveraging AI to automate cybercrime to crafting attacks that specifically target the weaknesses of machine learning models. Two distinct strands are emerging, namely, the cybersecurity OF AI and cybersecurity WITH AI. For now, security researchers are racing to implement countermeasures that harden AI against these threats, but the gap between innovation and security remains difficult to bridge.

Future advancements in AI security will depend on a combination of defensive AI techniques, cryptographic safeguards, and regulatory frameworks that ensure responsible deployment. Organizations must take a proactive stance, embedding security into AI development processes rather than treating it as an afterthought. The implementation of adversarial training, privacy-preserving techniques, and robust governance models will be key in defining AI’s long-term security posture. As more and more sectors of our economies further digitise, seeking the cost savings AI can offer, the attack surface will increase and will move into areas where cybersecurity has not traditionally been a key consideration.

As AI-driven threats continue to evolve, so must our approach to defending against them. The future of AI security will not be determined by a single breakthrough but by a continuous effort to anticipate risks, adapt to new attack vectors, and develop resilient defence strategies. In an era where artificial intelligence is both an asset and a liability, only those who prioritize security will be able to fully harness its potential without falling victim to its vulnerabilities.
