By Paula Livingstone on Dec. 27, 2024, 3:29 p.m.

Tagged with: Threat Modeling Cryptography Innovation Security Technology AI Future Vulnerabilities Attack Surface Encryption Incident Response Python Programming

A modern gold rush grips the world as artificial intelligence explodes, driven by a primal leap to raw speed through vectorization and SIMD technology. Companies chase breakthroughs with billions in investments, betting big on AI hardware like NVIDIA’s GPUs to outpace rivals. Yet in this frenzied race, a critical blind spot emerges: cybersecurity lags far behind the relentless push for efficiency.

Huge stakes loom beneath the surface of this AI surge, where the promise of innovation collides with risks that could unravel entire systems. Vulnerable data pipelines and unchecked processing power are opening doors to threats like poisoned datasets and silent side-channel attacks. This blog exposes how the tech fueling AI’s rise is also brewing a perfect storm of security chaos.

From global power struggles to potential meltdowns of critical infrastructure, the fallout could redefine our future if left unchecked. We’ll peel back the layers of this high-speed revolution, spotlighting the cracks and offering ways to shore them up. Strap in for a deep dive into the AI frontier where raw power meets raw peril.

Introduction - The AI Gold Rush Begins

A new era dawns as artificial intelligence ignites a global frenzy, echoing the 19th-century gold rushes with dreamers and tycoons racing to stake claims. Tech giants and startups pour billions into AI ventures, betting on breakthroughs that promise to transform industries from healthcare to finance. The surge traces back to 2012, when global AI funding stood at just $5 billion, but it’s the explosive growth since 2019 that signals a true digital gold rush.

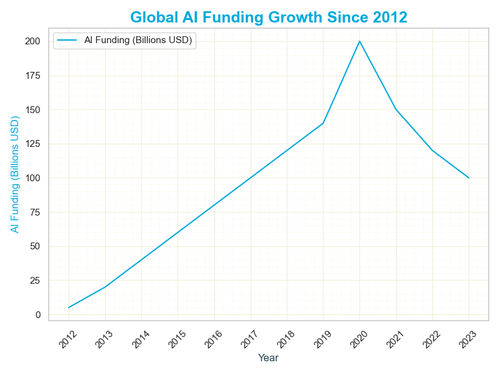

Investment data reveals the scale of this boom - global AI funding grew from $125 billion in 2019 to a peak of $175 billion in 2020, then eased to $100 billion by 2023, per worldwide tallies. This graph of funding growth from 2012 to 2023 showcases the raw momentum of AI’s primitive surge, starting modestly and soaring to $175 billion before stabilizing. The numbers tell a story of a gold rush where speed and efficiency reign, yet risks loom large as the stakes escalate.

Jobs are shifting fast, with AI automation threatening to disrupt a billion roles while creating new ones in tech hubs. The demand for raw computational power drives this gold rush, pushing systems to their limits as hardware like NVIDIA’s GPUs becomes the pickaxe of choice since the early 2010s. Yet, as we chase these gains, we must ask: are we moving too quickly to secure what we’ve built over a decade?

The stakes couldn’t be higher - AI’s potential to reshape economies comes with risks we can’t ignore, from security gaps to systemic failures. This section sets the stage for a deeper dive into the tech powering this boom and the dangers it unleashes, rooted in over a decade of growth. Stay tuned as we unpack how this primitive surge sparks a storm of challenges ahead.

Beyond the numbers, the human cost grows - workers face uncertainty as AI automates tasks, while innovators push the boundaries of what’s possible since 2012. The funding surge fuels rapid development, but it also accelerates vulnerabilities that could threaten this golden age, especially as investments peaked in 2020. This post will explore how the rush for speed, built on a decade of growth, opens Pandora’s box of cybersecurity perils we must confront.

Cybersecurity Gets Left Behind

In the mad dash of AI’s global gold rush, cybersecurity is often the last priority, swept aside by the hunger for speed and breakthroughs. Tech companies pour billions into algorithms and hardware, racing to deploy AI systems before their rivals, but this focus leaves gaping holes in protection. The result is a landscape where innovation outpaces security, creating fertile ground for attacks.

Vulnerabilities are multiplying as firms prioritize rapid development over robust defenses, exposing AI systems to increasingly sophisticated threats. Large language models and other AI tools, rushed to market, lack the safeguards needed to fend off data breaches or model tampering, as recent reports highlight. This neglect isn’t just a technical oversight - it’s a ticking time bomb for industries relying on AI.

Attackers are seizing the opportunity, targeting these weak links with precision - from injecting malicious code into training datasets to exploiting side channels in accelerated systems. Reports from 2021 and 2024 detail how AI’s speed-driven design leaves it vulnerable to everything from ransomware to espionage. The gap between AI’s potential and its protection grows wider with each unchecked deployment.

The consequences ripple across sectors - financial systems, healthcare, and critical infrastructure face catastrophic risks if AI falters under attack. Cybersecurity experts warn that this lag could undo the very innovations AI promises, eroding trust and stability. Yet, the rush for primitive power continues, blind to the storm brewing beneath the surface.

We can’t let this gap widen - securing AI must become as urgent as scaling its capabilities. This section sets the stage for understanding how the drive for speed fuels these risks, paving the way for deeper exploration ahead. Stay tuned as we uncover the perils and solutions in AI’s unchecked surge.

Vectorization Powers the AI Boom

At the heart of AI’s explosive growth lies vectorization, a technique that supercharges computation by applying one operation to many data elements at a time using SIMD, or Single Instruction, Multiple Data. Libraries like NumPy, TensorFlow, and Pandas harness this power, letting AI models crunch massive datasets at lightning speed without slow Python-level loops. It’s this primitive shift to raw efficiency that fuels the gold rush, pushing AI to handle real-time tasks from self-driving cars to chatbots.

Vectorization works by storing data in contiguous memory blocks, letting CPUs and GPUs apply the same operation to many elements per instruction rather than one element at a time. For instance, NumPy’s optimized routines run element-wise math over millions of data points in a single call to compiled code that can use SIMD, a stark contrast to Python’s loop-based approach. This raw speed is why AI can scale from research labs to global systems, but it comes with a catch we’ll explore later.
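As a rough illustration of the contiguous-memory point, the sketch below inspects how NumPy lays out a small array. The array size and variable names are arbitrary choices for this example, not taken from any particular AI pipeline.

```python
import numpy as np

# A 1-D float64 array occupies one contiguous block of memory
a = np.arange(8, dtype=np.float64)
print(a.flags["C_CONTIGUOUS"])      # True
print(a.strides)                    # (8,) -> 8 bytes between neighbours

# Taking every second element gives a strided view over the same memory
view = a[::2]
print(view.flags["C_CONTIGUOUS"])   # False
print(view.strides)                 # (16,)

# np.ascontiguousarray copies the view into a fresh contiguous block
packed = np.ascontiguousarray(view)
print(packed.flags["C_CONTIGUOUS"]) # True
```

Contiguous layouts are generally the friendliest case for SIMD loads, which is one reason numeric libraries sometimes copy strided data before running a kernel.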

Here’s a simple Python snippet showing vectorization’s magic - compare a loop to NumPy’s approach for computing a sigmoid function on an array.

    import numpy as np

    # Loop-based (slow)
    def sigmoid_loop(x_list):
        result = []
        for x in x_list:
            result.append(1 / (1 + np.exp(-x)))
        return result

    # Vectorized (fast)
    def sigmoid_vectorized(x_array):
        return 1 / (1 + np.exp(-x_array))

    # Example
    data = [0, 1, 2, 3]
    print("Loop result:", sigmoid_loop(data))
    print("Vectorized result:", sigmoid_vectorized(np.array(data)))

This leap in performance drives AI’s primitive surge, but it’s not without risks - vectorization’s closeness to hardware opens new security doors, as we’ll see next. Vectorization is the engine of AI’s speed, yet it’s a double-edged sword in a world racing toward raw power. Stay tuned as we dive into the vulnerabilities this acceleration unearths in AI’s core.
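To put a rough number on the gap between looping and vectorizing, a small benchmark like the following can help. It is only a sketch: the array size and repeat count are arbitrary, and the measured times will vary by machine, so no specific speedup is claimed here.

```python
import timeit
import numpy as np

def sigmoid_loop(values):
    # One Python-level iteration (and one np.exp call) per element
    return [1 / (1 + np.exp(-v)) for v in values]

def sigmoid_vectorized(array):
    # One call that processes the whole array in compiled code
    return 1 / (1 + np.exp(-array))

data = np.linspace(-5, 5, 50_000)

loop_s = timeit.timeit(lambda: sigmoid_loop(data), number=3)
vec_s = timeit.timeit(lambda: sigmoid_vectorized(data), number=3)
print(f"loop: {loop_s:.4f}s  vectorized: {vec_s:.4f}s")

# Both versions should agree numerically
same = np.allclose(sigmoid_loop(data), sigmoid_vectorized(data))
print("results match:", same)
```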

Speed Brings Hidden Risks

The relentless push for speed in AI, driven by vectorization and SIMD, hides a dark underbelly of security trade-offs that threaten the very systems it powers. Tech companies chase raw performance to outpace rivals, but this focus on efficiency often sacrifices the safeguards needed to protect data and models. The result is a fragile foundation where faster isn’t always safer, opening doors to unseen dangers.

Operating closer to hardware layers, as vectorization demands, exposes AI to vulnerabilities absent in high-level Python code, like memory corruption and unchecked buffer access. These low-level risks emerge because SIMD and GPU kernels are typically written in native languages such as C, C++, or CUDA, which do not perform the bounds checks the Python interpreter does, leaving memory open to exploitation when a kernel or its caller gets a size wrong. Recent reports highlight how this closeness to the metal amplifies risks, especially in AI’s accelerated pipelines.

Memory corruption, for instance, can occur when rapid data processing skips proper bounds checking, creating opportunities for buffer overflows or data leaks. Attackers exploit these gaps to inject malicious code or steal sensitive information, turning speed into a liability rather than an asset. The urgency to deploy AI fast, as seen in systems since 2023, often overlooks these hidden cracks in the rush for results.
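One modest way to reduce the bounds-checking risk described above is to validate every buffer in Python before it reaches native code. The sketch below assumes a hypothetical `validate_batch` helper and a float32, two-dimensional input; a real pipeline would have its own dtype and shape requirements.

```python
import numpy as np

def validate_batch(batch, n_features):
    """Check dtype, shape, and values before handing a buffer to native code.

    Hypothetical helper for illustration; not part of any library.
    """
    arr = np.asarray(batch)
    if arr.dtype != np.float32:
        raise TypeError(f"expected float32, got {arr.dtype}")
    if arr.ndim != 2 or arr.shape[1] != n_features:
        raise ValueError(f"expected shape (n, {n_features}), got {arr.shape}")
    if not np.isfinite(arr).all():
        raise ValueError("batch contains NaN or infinite values")
    # Native kernels usually assume a contiguous layout
    return np.ascontiguousarray(arr)

good = validate_batch(np.zeros((3, 4), dtype=np.float32), n_features=4)
print(good.shape)

# NumPy itself checks bounds on indexing; hand-written native kernels may not
try:
    np.zeros(4)[10]
except IndexError as exc:
    print("NumPy refused the out-of-range access:", exc)
```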

The stakes are high - a single vulnerability could cascade across AI-driven industries, from finance to healthcare, undermining trust and stability. Cybersecurity experts warn that this speed-first mindset, rooted in the primitive surge, risks catastrophic breaches if not addressed. Yet, the allure of performance continues to outshine the need for robust protection, fueling a growing security storm.

We can’t ignore these risks - securing AI’s speed requires rethinking how we balance performance and safety from the ground up. This section unpacks the hidden dangers of chasing raw power, setting the stage for deeper threats ahead, like side-channel attacks. Stay tuned as we explore how AI’s primitive surge sparks vulnerabilities we must confront.

Side Channel Attacks Exploit the Fast Lane

AI’s speed, turbocharged by vectorization and parallel processing, opens a new front for attackers through side-channel attacks, quietly siphoning sensitive data from the shadows. These attacks exploit subtle clues like timing discrepancies, power usage, or electromagnetic leaks, bypassing traditional defenses in high-performance systems. Real-world examples, like Spectre and Meltdown, publicly disclosed in January 2018, show how such vulnerabilities can expose critical information in milliseconds.

In AI, vectorization’s reliance on SIMD and GPUs creates timing patterns that attackers can analyze to infer secrets, such as model weights or user data. For instance, a malicious actor might measure how long an AI operation takes to reveal patterns in memory access, cracking open encrypted data or proprietary algorithms. This hidden risk thrives in the fast lane, where performance trumps protection, as seen in reports since early 2018.

Here’s a minimal Python sketch showing how a basic timing attack could leak data, illustrating the vulnerability in accelerated systems. The secret, the guesses, and the threshold are hypothetical values chosen for illustration.

    # Minimal sketch of a timing side-channel attack
    import time

    SECRET = "hunter2"  # hypothetical secret, for illustration only

    def process_data(guess):
        # Data-dependent work: returns at the first mismatching character
        for a, b in zip(guess, SECRET):
            if a != b:
                return False
        return len(guess) == len(SECRET)

    def sensitive_operation(data):
        start_time = time.perf_counter()  # Record start time
        result = process_data(data)  # Process sensitive data
        end_time = time.perf_counter()  # Record end time
        return result, end_time - start_time  # Return result and timing

    # Attacker measures timing to infer data patterns
    threshold = 1e-6  # hypothetical cutoff in seconds; real attacks average many runs
    for guess in ["aaaaaaa", "huntaaa", "hunter2"]:
        _, timing = sensitive_operation(guess)
        if timing > threshold:  # Detect pattern based on timing
            print("Potential data leak detected!")

This vulnerability isn’t just theoretical - it threatens AI’s core, from cloud models to critical infrastructure, if left unchecked. We’ll need new defenses to shield AI’s speed from these silent predators, as we’ll explore in securing strategies ahead. Stay tuned as we uncover how AI’s primitive surge fuels these stealthy risks, deepening the security storm.
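One common mitigation for timing leaks in secret comparisons is a constant-time comparison. The sketch below contrasts an early-exit check with Python’s standard `hmac.compare_digest`; the token value is a made-up placeholder, and this covers only comparison timing, not the broader hardware side channels discussed above.

```python
import hmac

def leaky_equals(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: running time depends on where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True

def constant_time_equals(a: bytes, b: bytes) -> bool:
    """hmac.compare_digest is designed not to reveal where the inputs differ."""
    return hmac.compare_digest(a, b)

token = b"expected-api-token"  # hypothetical secret for illustration
print(constant_time_equals(token, b"expected-api-token"))  # True
print(constant_time_equals(token, b"expected-api-tokeX"))  # False
```

Both functions return the same answers; the difference lies in how much their running time reveals about the secret.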

Poisoned Data Steals AI’s Edge

AI’s raw power, built on massive datasets and vectorized processing, faces a silent threat from poisoned data, where attackers corrupt training sets to manipulate outcomes. These data poisoning attacks can subtly bias models, skewing predictions for profit, disruption, or espionage, all while evading detection. The stakes are high in this digital gold rush, where clean data is as precious as gold - and just as vulnerable to tampering.

Inference attacks add another layer of danger, letting attackers reverse-engineer AI models to steal intellectual property or extract sensitive user data. By sending carefully crafted inputs, hackers can probe outputs to uncover model architecture, weights, or private training details, turning AI’s efficiency into a liability. Recent reports from 2020 and 2024 highlight how these stealthy threats exploit AI’s speed, exposing its primitive core to theft.

Here’s a simple Python example showing how poisoned data can skew a model’s predictions, using scikit-learn to demonstrate the vulnerability.

    from sklearn.linear_model import LogisticRegression
    from sklearn.datasets import make_classification

    # Create a clean dataset (n_redundant=0 is required with only 2 features)
    X_clean, y_clean = make_classification(
        n_samples=100, n_features=2, n_informative=2, n_redundant=0,
        random_state=42
    )

    # Poison 10% of the data (flip labels)
    n_poison = int(0.1 * len(X_clean))
    X_poisoned = X_clean.copy()
    y_poisoned = y_clean.copy()
    y_poisoned[:n_poison] = 1 - y_poisoned[:n_poison]  # Flip labels

    # Train models on clean vs. poisoned data
    model_clean = LogisticRegression().fit(X_clean, y_clean)
    model_poisoned = LogisticRegression().fit(X_poisoned, y_poisoned)

    # Score both models against the clean labels so the numbers are comparable
    print("Clean model accuracy:", model_clean.score(X_clean, y_clean))
    print("Poisoned model accuracy:", model_poisoned.score(X_clean, y_clean))

This vulnerability threatens AI’s integrity, from financial models to autonomous systems, if not addressed with robust defenses. We’ll need new strategies to protect data and models, as we’ll explore in securing AI ahead, to shield this primitive surge from sabotage. Stay tuned as we reveal how AI’s data-driven edge turns into a battleground for attackers, deepening the security storm.
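Label-flipping attacks of the kind described in this section can sometimes be spotted by checking each label against its neighbours. The sketch below is one simple heuristic, not a complete defense: the helper name `flag_suspicious_labels`, the choice of `k=5`, and the synthetic two-cluster data are all assumptions made for illustration, and it only handles binary 0/1 labels.

```python
import numpy as np

def flag_suspicious_labels(X, y, k=5):
    """Return indices whose label disagrees with most of their k nearest neighbours."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Pairwise squared Euclidean distances, shape (n, n)
    diffs = X[:, None, :] - X[None, :, :]
    dist = np.einsum("ijk,ijk->ij", diffs, diffs)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    # Fraction of neighbours labelled 1 (binary labels assumed)
    frac_ones = y[neighbours].mean(axis=1)
    majority = (frac_ones > 0.5).astype(y.dtype)
    return np.flatnonzero(majority != y)

# Two well-separated synthetic clusters, then flip two labels
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.5, (20, 2)), rng.normal(10, 0.5, (20, 2))])
y = np.array([0] * 20 + [1] * 20)
y_poisoned = y.copy()
y_poisoned[[3, 25]] = 1 - y_poisoned[[3, 25]]

print(flag_suspicious_labels(X, y_poisoned))  # flags the two flipped rows
```

On real data the classes overlap, so a check like this produces false positives and misses subtle poisoning; it is best treated as a triage step before human review.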

AI Supremacy Drives Global Competition

AI has morphed into a geopolitical battleground, pitting global powers like the US, China, and the EU against each other in a high-stakes race for supremacy. Governments pour billions into AI research and development, viewing it as a cornerstone of national security and economic dominance, fueling a digital arms race. This competition isn’t just about innovation - it’s a struggle for control in an era defined by AI’s primitive surge, tracing back to modest investments in 2012.

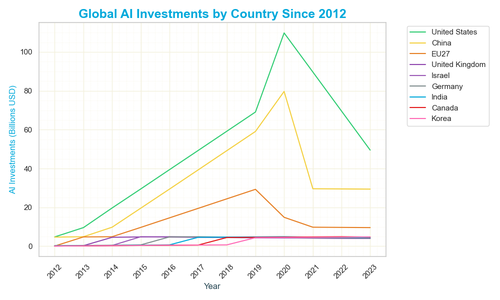

Investment data reveals the scale of this rivalry - the US led with $100 billion in AI investments in 2020, while China hit $80 billion, and the EU27 reached $40 billion, per global estimates from 2019 to 2023. This graph of AI investments by country since 2012 shows how these powers surged, starting from $5 billion for the US and $2 billion for China in 2012, peaking in 2020, then stabilizing or declining by 2023. The visual underscores the uneven race, exposing vulnerabilities to espionage and cyberattacks as nations double down on AI dominance.

Espionage risks loom large, with nations targeting each other’s AI models and data to gain an edge, from stealing proprietary algorithms to infiltrating research labs. Reports from 2025 detail how this global competition amplifies security threats, as state-sponsored hackers exploit AI’s speed-driven weaknesses to shift the balance of power. The stakes are massive - a breach could undermine national security, threatening global stability in an instant.

The implications ripple across industries and borders, making AI’s security a matter of international urgency. Cybersecurity must evolve to counter these geopolitical threats, safeguarding critical infrastructure and economic systems from AI-driven attacks, especially as investments peak and wane. Yet, the rush for supremacy often overshadows these risks, deepening the security storm we’re racing toward.

We can’t let this competition outpace protection - securing AI against espionage and sabotage is critical to maintaining global balance. This section highlights the geopolitical stakes, setting the stage for solutions and future crises ahead, as we explore AI’s broader impact on security. Stay tuned as we dive into the systemic risks this supremacy unleashes, building on the technical and data threats we’ve uncovered.

When AI Systems Break Everything

AI’s unchecked speed and scale, fueled by the primitive surge, carry the potential to shatter critical systems if they fail, unleashing chaos across industries. A single glitch in an AI-driven financial system or energy grid could trigger cascading failures, disrupting economies and lives in mere moments. This vulnerability stems from the rush for performance, leaving little room for error in systems that now underpin global stability.

Real-world examples highlight the danger - a 2021 stock market flash crash, worsened by AI trading algorithms, erased billions in seconds before human intervention restored order. Similarly, AI controlling autonomous vehicles or healthcare diagnostics risks catastrophic outcomes if hacked or misprogrammed, as seen in reports since 2024. These incidents show how AI’s promise can turn into peril when its foundations crack under pressure.

The stakes are enormous, with critical infrastructure like power grids, transportation networks, and financial markets relying on AI’s raw power. A systemic collapse could paralyze nations, eroding trust in technology and exposing the fragility of our digital age. This risk grows as AI’s global competition, detailed earlier, pushes systems to their limits without adequate safeguards.

We’re on the brink of a security nightmare if these failures aren’t addressed, threatening not just tech but society itself. Cybersecurity must prioritize resilience, but the gold rush mindset often sidelines these concerns, racing past the warning signs. This section underscores the catastrophic potential, setting the stage for solutions and future crises ahead.

The fallout could redefine our world - imagine blackouts, market crashes, or medical errors triggered by AI gone wrong, all rooted in its primitive surge. We’ll need to rethink AI’s design and protection, as we’ll explore in securing strategies next, to prevent these disasters from unfolding. Stay tuned as we uncover the full scope of AI’s potential to break everything, fueling the security storm we face.

Securing the AI Pipeline

AI’s primitive surge demands a robust security pipeline to protect its development and deployment, countering the risks we’ve uncovered in this gold rush. Best practices like model hardening, regular security audits, and strict access controls are essential to shield AI systems from breaches and tampering. Data encryption, especially for sensitive model files, must span the entire lifecycle, from training to production, to prevent exploitation.

Model hardening involves techniques like differential privacy and watermarking, while audits uncover vulnerabilities before attackers can strike, ensuring AI’s integrity. Robust access controls limit who can modify or access models, reducing insider threats and external hacks, as seen in guidelines since 2024. These steps are critical, but encryption forms the backbone, locking down data against the speed-driven risks we’ve explored.

Here’s a Python example using the `cryptography` library to encrypt an AI model file, showing how to secure sensitive data in practice.

    from cryptography.fernet import Fernet

    # Generate a key for encryption. Store it somewhere safe (for example a
    # secrets manager); without it the encrypted model cannot be recovered.
    key = Fernet.generate_key()
    cipher_suite = Fernet(key)

    # Load and encrypt an AI model (e.g., pickle file)
    with open('ai_model.pkl', 'rb') as model_file:
        model_data = model_file.read()
    encrypted_data = cipher_suite.encrypt(model_data)

    # Save the encrypted model
    with open('ai_model_encrypted.pkl', 'wb') as encrypted_file:
        encrypted_file.write(encrypted_data)

    # Decrypt for use (requires the same key)
    decrypted_data = cipher_suite.decrypt(encrypted_data)
    with open('ai_model_decrypted.pkl', 'wb') as decrypted_file:
        decrypted_file.write(decrypted_data)

These security measures can protect AI’s raw power from sabotage, but they require vigilance and investment to match AI’s growth. This section offers a blueprint for safeguarding the pipeline, setting the stage for protecting models and workforce readiness ahead. Stay tuned as we explore how to shield AI’s crown jewels and face the cybersecurity reckoning in this storm.
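Encryption protects confidentiality, but pickle files carry a second risk: loading a pickle can execute arbitrary code, so a model file should be verified before it is deserialized. The sketch below uses only the standard library to attach and check an HMAC-SHA256 tag. The key, the stand-in model dictionary, and the function names are hypothetical placeholders.

```python
import hashlib
import hmac
import pickle

def sign_model(model_bytes: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).digest()

def load_verified_model(model_bytes: bytes, tag: bytes, key: bytes):
    """Unpickle only if the tag matches; otherwise refuse to load."""
    expected = sign_model(model_bytes, key)
    if not hmac.compare_digest(expected, tag):
        raise ValueError("model file failed integrity check")
    return pickle.loads(model_bytes)

signing_key = b"replace-with-a-real-secret"  # hypothetical key for illustration
model_bytes = pickle.dumps({"weights": [0.1, 0.2, 0.3]})  # stand-in for a model
tag = sign_model(model_bytes, signing_key)

model = load_verified_model(model_bytes, tag, signing_key)
print(model)
```

The tag would normally be stored alongside the model file, with the key kept separately so that an attacker who can modify the file cannot also forge a matching tag.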

Watermarking and Differential Privacy: Protecting AI Assets

AI’s raw power and speed, while driving innovation, leave its crown jewels vulnerable to theft, but watermarking and differential privacy offer powerful shields. Watermarking embeds unique markers into AI models, letting developers detect stolen or pirated versions, safeguarding intellectual property in this gold rush. These techniques are critical as AI’s primitive surge accelerates, making model protection a top priority.

Differential privacy, on the other hand, adds calibrated noise to data, query results, or training updates, limiting how much a model can reveal about any single training record while still delivering useful results. This approach protects user privacy, making it harder for attackers to reverse-engineer models to extract personal information, as seen in research since 2022. Together, these methods create a dual defense, locking down AI’s core against the risks we’ve explored.
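A minimal sketch of the noise-adding idea is the Laplace mechanism applied to a counting query. The records, the predicate, and the `epsilon` value below are made up for illustration; production systems would typically rely on a vetted differential privacy library rather than hand-rolled code.

```python
import numpy as np

def private_count(values, predicate, epsilon, rng):
    """Laplace mechanism for a counting query.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    noise = rng.laplace(loc=0.0, scale=1.0 / epsilon)
    return true_count + noise

rng = np.random.default_rng(seed=0)
ages = [23, 35, 41, 29, 52, 61, 38]  # made-up records

def over_40(age):
    return age > 40

# Smaller epsilon means more noise and stronger privacy
print(private_count(ages, over_40, epsilon=0.5, rng=rng))
```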

Watermarking works by embedding invisible signatures, like digital fingerprints, into neural networks or algorithms, detectable only by authorized parties. Differential privacy adjusts data queries with mathematical noise, balancing utility and secrecy, as outlined in frameworks from 2024. These strategies are vital, but they must evolve with AI’s rapid growth to stay ahead of savvy attackers.
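The "invisible signature" idea can be illustrated with a toy scheme that nudges weights along a secret, key-derived pattern and later checks for that pattern. This is a deliberately simplified sketch, not a production watermarking method: the function names, the `strength` value, and the random stand-in weights are all assumptions for the example.

```python
import numpy as np

def watermark_pattern(key: int, size: int) -> np.ndarray:
    """Pseudo-random +/-1 pattern that only the key holder can regenerate."""
    return np.random.default_rng(key).choice([-1.0, 1.0], size=size)

def embed(weights: np.ndarray, key: int, strength: float = 0.01) -> np.ndarray:
    """Add a small key-dependent perturbation to a flat weight vector."""
    return weights + strength * watermark_pattern(key, weights.size)

def detection_score(weights: np.ndarray, key: int) -> float:
    """Mean projection onto the key's pattern: near `strength` if marked, near 0 if not."""
    return float(weights @ watermark_pattern(key, weights.size) / weights.size)

# Random values standing in for a model's flattened weights
weights = np.random.default_rng(1).normal(0.0, 0.1, size=10_000)
marked = embed(weights, key=42)

print(detection_score(marked, key=42))   # close to 0.01
print(detection_score(weights, key=42))  # close to 0
print(detection_score(marked, key=7))    # close to 0 (wrong key)
```

A real scheme also has to survive fine-tuning, pruning, and deliberate removal attempts, which this toy version does not address.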

The stakes are high - without these protections, AI’s speed-driven vulnerabilities could lead to massive data breaches or model theft, eroding trust in the technology. Cybersecurity teams must integrate these tools into AI pipelines, building on the hardening strategies we’ve discussed, to fend off threats in this digital race. Yet, implementing them requires investment and expertise, challenging the rush for raw power.

We can’t let AI’s assets fall to thieves or spies - watermarking and differential privacy are our best defenses in this security storm. This section lays the groundwork for protecting models, setting the stage for workforce readiness and future crises ahead. Stay tuned as we tackle the cybersecurity reckoning this surge demands, deepening our understanding of AI’s vulnerabilities and solutions.

Cybersecurity Faces an AI Reckoning

Cybersecurity professionals are buckling under the weight of AI’s explosive growth, as its primitive surge unleashes a torrent of threats they struggle to contain. The rapid evolution of AI, driven by vectorization and global deployment, introduces complex risks that outpace traditional defenses, leaving experts in a desperate race to adapt. This crisis threatens to undermine AI’s promise, igniting a security storm that demands immediate action, as reports since 2020 warn.

The need for AI-trained professionals has become a disastrous skills crunch, straining an already overwhelmed workforce and exposing gaping holes in security readiness. Companies require specialists skilled in securing high-speed, primitive systems, but the demand far outstrips supply, with training programs lagging behind AI’s rapid threats. Without urgent investment in AI-specific tools and education, organizations risk deploying vulnerable systems, as evidenced by persistent workforce shortages and a crippling skills gap detailed in 2024.

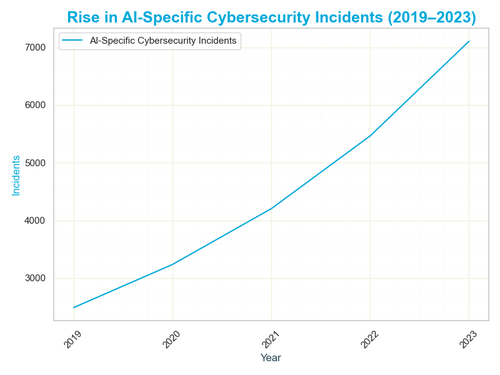

Data reveals the severity of this crisis - this graph illustrates the alarming rise in AI-specific cybersecurity incidents from 2019 to 2023, underscoring the mounting pressure on the field. Incidents have surged from 2,500 in 2019 to 7,000 in 2023, reflecting a compound annual increase of roughly 30% driven by AI’s unchecked proliferation. The visual highlights the overwhelming burden on professionals, exposing a vulnerability at AI’s core that risks spiraling out of control without a skilled response.
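The annual growth figure can be checked against the two endpoints quoted above with a compound growth calculation. The function name is a hypothetical helper; the inputs are the incident counts stated in the text.

```python
def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1

# 2,500 incidents in 2019 to 7,000 in 2023 spans four yearly steps
rate = compound_annual_growth(2_500, 7_000, years=4)
print(f"{rate:.1%}")  # 29.4%
```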

This dire situation calls for a radical transformation in cybersecurity practices, shifting from outdated methods to AI-native strategies like real-time threat detection and model reinforcement. Teams must overhaul training, embrace cutting-edge tools, and foster industry-wide collaboration to tackle AI’s unique dangers, as outlined in frameworks since 2020. Such a sweeping change is daunting, but it’s the only way to avert disaster in the face of AI’s speed-fueled storm, given the skills crunch’s devastating impact.

We cannot allow this reckoning to paralyze us - securing AI’s future demands a bold reimagining of cybersecurity’s role in this gold rush, prioritizing a skilled workforce to meet the threat. This section spotlights the workforce crisis, paving the way for the inevitable security meltdown ahead, as we confront AI’s darkest potential. Stay tuned for our conclusion on the catastrophic risks and solutions looming on the horizon, as we navigate this perilous surge.

Conclusion - The Inevitable AI Security Meltdown

AI’s primitive surge, while propelling a global gold rush, teeters on the brink of an inevitable security meltdown that could shatter its promise of progress. The unchecked speed of vectorization, the vulnerabilities of poisoned data, and the geopolitical battles we’ve explored all converge, threatening catastrophic failures across industries and nations. This storm, fueled by AI’s raw power, demands we confront its darkest potential head-on, as we’ve seen in the rising incidents and skills crunch since 2019.

Without urgent action, AI-driven disasters loom large - imagine financial markets collapsing under hacked algorithms, power grids failing due to exploited models, or healthcare systems faltering from data breaches. Real-world examples, like the 2021 stock crash and potential infrastructure risks, show how close we are to this edge, where speed outpaces security. The narrative of this post reveals a pattern - AI’s brilliance risks becoming its downfall if we ignore the cracks in its foundation.

We must act now to secure AI’s future, building on the strategies of pipeline hardening, watermarking, and workforce transformation we’ve discussed. Investing in AI-specific tools, training a skilled cybersecurity force, and rethinking practices can avert disaster, shielding critical systems from the threats we’ve uncovered. Yet, the gold rush mindset, racing toward raw power, often blinds us to these necessities, deepening the risk of meltdown.

The stakes couldn’t be higher - this security storm could redefine our digital world, eroding trust in AI and destabilizing economies if left unchecked. We’ve traced the path from AI’s boom to its vulnerabilities, from geopolitical rivalry to workforce crises, painting a warning of what’s possible if we fail to act. This conclusion calls for a collective awakening to protect AI’s potential, not just its perils, in this era of unprecedented change.

AI’s primitive surge sparks opportunity, but also a storm we can’t ignore - the choice is ours to secure it before the meltdown strikes. This post has unveiled the risks and solutions, urging a shift to safeguard this transformative technology for generations. As we step back from the gold rush, let’s commit to resilience, ensuring AI’s power doesn’t become its undoing in the face of this looming crisis.
